This blog post is the latest in an ongoing series about GitLab's journey to build and integrate AI/ML into our DevSecOps platform. The first blog post can be found here. Throughout the series, we'll feature blogs from our product, engineering, and UX teams to showcase how we're infusing AI/ML into GitLab.

GitLab surfaces vulnerabilities along with relevant information. However, users often aren't sure where to start. It takes time to research and synthesize the information surfaced in the vulnerability record, and figuring out how to fix a given vulnerability can be difficult.

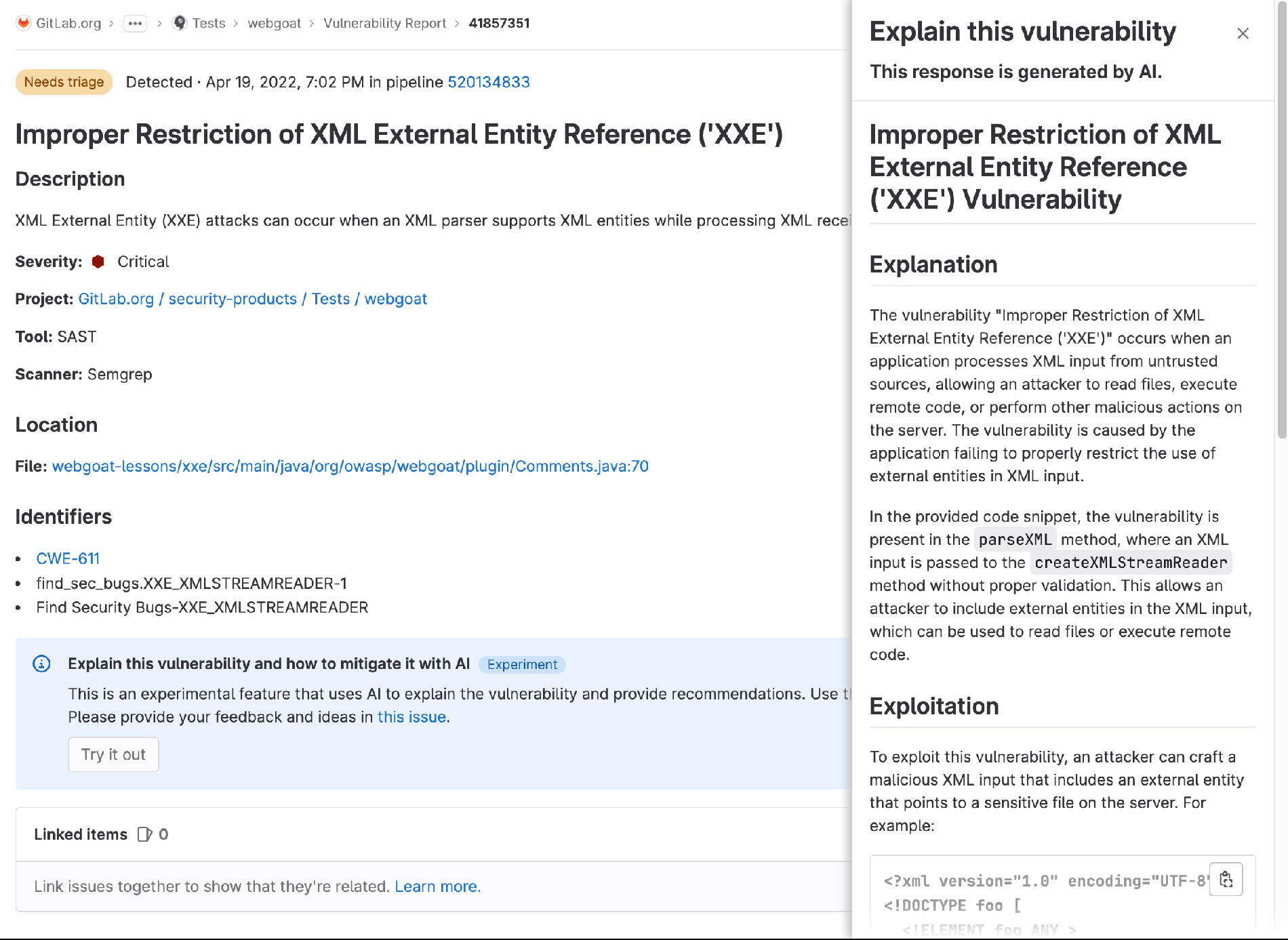

To help teams identify an effective way to fix a vulnerability within the context of their specific codebase, we have released an experimental feature that provides AI-assisted vulnerability recommendations in GitLab, leveraging the explanatory power of large language models. This capability combines basic vulnerability information with insights derived from the customer's code to explain the vulnerability in context, demonstrate how it can be exploited, and provide an example fix.

Isaac Dawson and Dinesh Bolkensteyn, both GitLab Vulnerability Research engineers, tested prompts against a large language model to see whether they could yield helpful results. After refining the prompts, they found that some could provide better details and even suggest fixes for vulnerabilities detected by static application security testing (SAST). Within a week, Product Designer Becka Lippert designed a prototype, and Daniel Tian, Mo Khan, and Neil McCorrison built this experimental feature in GitLab.

This feature is powered by Google AI. Learn more about our partnership with Google Cloud to enrich GitLab features with generative AI.

You can explore the "explain this vulnerability" feature with a click-through demo.

Iterating on AI/ML features

This experimental feature is available on GitLab.com today. We are exploring what this feature could look like for vulnerability types beyond SAST, as well as within merge requests. Have an idea that would make this feature better? Please share it with us, along with any feedback, in this issue.

This experiment is just the start of the ways we're looking to infuse GitLab with AI/ML capabilities to help GitLab users become more efficient and effective at their jobs. We are looking across the software development lifecycle for painful and time-consuming tasks that are ideal for AI-assisted features. We'll continue to share these demos throughout this blog series.

Interested in using these AI-generated features? Join our waitlist and share your ideas.

Continue reading our ongoing series, "AI/ML in DevSecOps".

Disclaimer: This blog contains information related to upcoming products, features, and functionality. It is important to note that the information in this blog post is for informational purposes only. Please do not rely on this information for purchasing or planning purposes. As with all projects, the items mentioned in this blog and linked pages are subject to change or delay. The development, release, and timing of any products, features, or functionality remain at the sole discretion of GitLab.