Improving the performance and reliability of GitLab.com has always been a top priority for GitLab. While we continuously make iterative improvements to GitLab and our production architecture, we anticipate making a larger change to improve the scalability and reliability of GitLab.com: We are splitting our single PostgreSQL database into a main and a ci database.

We believe this process, also known as functional decomposition, will increase GitLab's database capacity by roughly 2x and allow GitLab.com to continue to scale.

When will the split take place and what does this mean for users of GitLab.com?

This change is planned to take place on Saturday, 2022-07-02, between 05:00 and 09:00 UTC. We anticipate that it will include a service downtime of up to 120 minutes, between 06:00 and 08:00 UTC on the same day. During the downtime, GitLab.com will be completely unavailable.

We are taking downtime to ensure that the application works as expected following the split and to minimize the risk of any data integrity issues.

Background

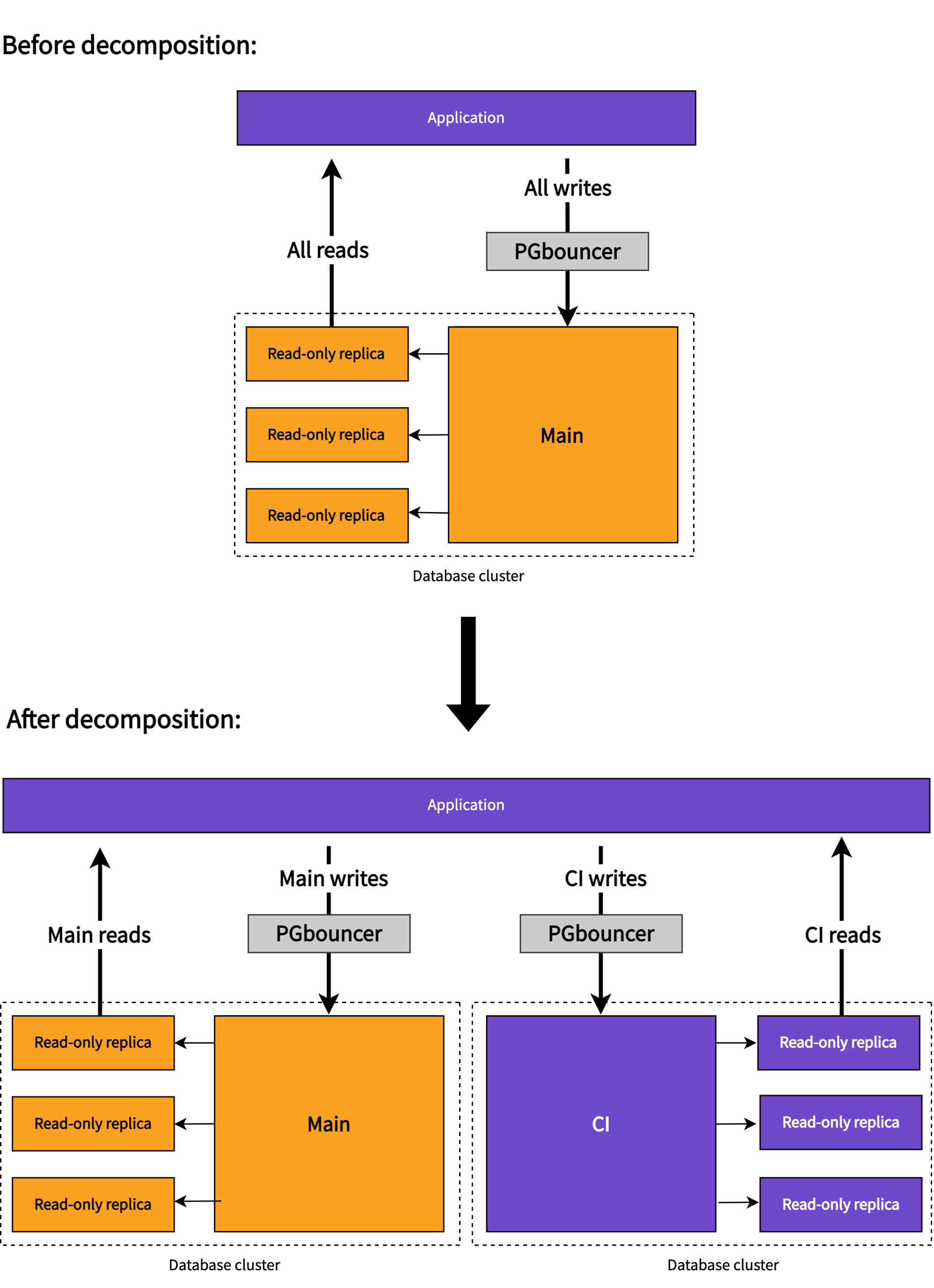

GitLab.com's database architecture uses a single PostgreSQL database cluster. This cluster, which we call main, consists of a single primary and multiple read-only replicas, and stores the data generated by all GitLab features. Database reads can be scaled horizontally by adding read-only replicas, but writes cannot, because PostgreSQL does not natively support active-active replication.
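To make the read/write asymmetry concrete, here is a minimal Python sketch of query routing within one cluster, assuming a router that inspects only the leading SQL verb. The `Cluster` class and the host names are hypothetical, and the sketch ignores transactions and replication lag, which a production load balancer has to handle.

```python
import itertools


class Cluster:
    """Hypothetical model of one PostgreSQL cluster: a single primary plus read-only replicas."""

    WRITE_VERBS = {"INSERT", "UPDATE", "DELETE"}

    def __init__(self, primary: str, replicas: list[str]) -> None:
        self.primary = primary
        self.replicas = replicas
        # Round-robin iterator used to spread reads across the replicas.
        self._next_replica = itertools.cycle(replicas)

    def host_for(self, sql: str) -> str:
        verb = sql.split(None, 1)[0].upper()
        if verb in self.WRITE_VERBS or not self.replicas:
            # Every write must go to the one primary: this is the part that cannot scale out.
            return self.primary
        # Reads rotate across replicas, so adding replicas adds read capacity.
        return next(self._next_replica)


main = Cluster("main-primary", ["main-replica-1", "main-replica-2"])
print(main.host_for("SELECT * FROM projects"))                        # main-replica-1
print(main.host_for("SELECT * FROM users"))                           # main-replica-2
print(main.host_for("UPDATE projects SET name = 'x' WHERE id = 1"))   # main-primary
```

However many replicas are added, the write path still ends at `self.primary`, which is why splitting writes across two clusters is the lever for write capacity.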

A large portion of all writes are generated by features related to Continuous Integration (CI). So, to scale GitLab.com's database capacity, we are splitting the single PostgreSQL main cluster into two clusters:

- A Continuous Integration database cluster for all CI-related features (ci).

- A database cluster for all other features (main).
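Because ci and main become separate PostgreSQL databases, every table has to belong to exactly one of them, and a plain SQL query can no longer join tables from both. The following minimal sketch illustrates that constraint; the `TABLE_DATABASE` mapping, its table names, and both functions are hypothetical examples, not GitLab's actual code or schema list.

```python
# Hypothetical assignment of tables to the two logical databases.
# The table names are illustrative only.
TABLE_DATABASE = {
    "ci_pipelines": "ci",
    "ci_builds": "ci",
    "projects": "main",
    "users": "main",
}


def database_for(table: str) -> str:
    """Return the logical database that owns a table; unassigned tables are an error."""
    if table not in TABLE_DATABASE:
        raise ValueError(f"table {table!r} is not assigned to a database")
    return TABLE_DATABASE[table]


def database_for_query(tables: list[str]) -> str:
    """Pick the single database a query can run on, rejecting queries that span both."""
    if not tables:
        raise ValueError("query references no tables")
    databases = {database_for(t) for t in tables}
    if len(databases) > 1:
        raise ValueError(f"query joins tables across databases: {sorted(databases)}")
    return databases.pop()


print(database_for_query(["ci_pipelines", "ci_builds"]))  # ci
print(database_for_query(["projects", "users"]))          # main
```

Requiring an explicit assignment, rather than guessing from a naming convention, surfaces unclassified tables as errors instead of silently routing them to the wrong cluster.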

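At a high level, GitLab.com's database architecture is changing from a single cluster that stores all data to two independent clusters, main and ci, each with its own primary and read-only replicas.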

You can learn more by visiting our public epic: Decompose GitLab.com's database to improve scalability.

Impact

Initially, splitting our database into main and ci will only affect GitLab.com. To ensure consistency, we plan to enable decomposition for self-managed GitLab instances later. While this split is a significant architectural change that we believe will increase GitLab's database capacity by roughly 2x, it brings other benefits as well.

Increased performance

By running two separate database clusters, we believe we will increase the overall number of available database connections. This means we can serve more traffic. It also means there is more headroom during peak hours, which reduces the likelihood of congestion that can degrade performance and UX.

Another significant advantage is that we expect to be able to tune the main and ci databases independently, optimizing each for its own workload.

Increased stability

Splitting the database cluster into main and ci means that CI writes shift to the ci database cluster. We anticipate this will reduce database saturation, which is a major cause of incidents. Consequently, we believe the overall stability of GitLab.com may increase following the split.

We believe increased stability means that development teams can spend more time delivering value through new features and other improvements, and less time guarding against potential issues.

Shipping as fast as ever

A primary objective of this project was to provide tooling to our development teams so that they can continue to develop features that use multiple databases. All of these tools, for example loose foreign keys or new data migrations for multiple databases, are already available and in use in production.

With these tools in place, we expect that teams will be able to ship features as fast as before.
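Loose foreign keys replace a database-enforced foreign key, which cannot span two databases, with asynchronous cleanup: deleting a parent row records the deletion, and a background job later removes the dependent rows. The sketch below models the idea with in-memory dicts. All names (`delete_project`, `process_deleted_records`, `deleted_records`) and the data are hypothetical, and in a real system the deletion record would typically be written by a database trigger in the same transaction rather than by application code.

```python
# Two separate in-memory "databases" (hypothetical data). A real foreign key
# constraint cannot reference a table that lives in another database.
main = {"projects": {1: "alpha", 2: "beta"}}
ci = {"ci_pipelines": {10: {"project_id": 1}, 11: {"project_id": 2}, 12: {"project_id": 1}}}

# Hypothetical queue of deleted parent rows; in practice a trigger would fill it.
deleted_records: list[tuple[str, int]] = []


def delete_project(project_id: int) -> None:
    """Delete the parent row and record the deletion instead of cascading immediately."""
    del main["projects"][project_id]
    deleted_records.append(("projects", project_id))


def process_deleted_records() -> int:
    """Background cleanup: delete child rows in ci that reference deleted projects."""
    removed = 0
    while deleted_records:
        table, parent_id = deleted_records.pop(0)
        if table != "projects":
            continue  # this sketch only knows about one parent table
        pipelines = ci["ci_pipelines"]
        orphans = [pid for pid, row in pipelines.items() if row["project_id"] == parent_id]
        for pid in orphans:
            del pipelines[pid]
        removed += len(orphans)
    return removed


delete_project(1)
print(process_deleted_records())   # 2
print(sorted(ci["ci_pipelines"]))  # [11]
```

The trade-off of this approach is that, until the cleanup job runs, ci rows can briefly reference a project that no longer exists, so code reading those rows must tolerate that window.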

Tools and dashboards re-use

This change does introduce additional complexity: after all, we will be running another database cluster. Given that the ci cluster is almost identical to the existing main cluster, our DBREs and SREs are able to re-use almost all tools (for example, for backups) and dashboards. This reduces the overall risk introduced by this change.

How we are preparing for the split

Over the last year, many teams at GitLab have worked to support running GitLab using multiple databases. In total, more than 600 merge requests made it into the product. Because we chose a phased rollout approach, almost all developed capabilities are already running on our production systems.

- We've already provisioned a standby cluster that serves CI read-only traffic on GitLab.com but, crucially, does not accept writes. This increases our confidence that the cluster is correctly provisioned and fully functional.

- We've also split out all CI write traffic into a separate connection. From an application standpoint, it appears as if we are already using a ci and a main database. This gives us confidence that the application changes are working correctly.

- Our CI pipelines also fully support running against multiple databases, and all tests are passing.
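Splitting CI writes into their own connection means application code addresses a logical ci database, while configuration decides which physical host serves it. The minimal sketch below assumes a simple name-to-host mapping; `BEFORE_PROMOTION`, `AFTER_PROMOTION`, `save_pipeline`, and the host names are all hypothetical. Before promotion both logical connections point at the main primary, and promotion only changes where ci resolves.

```python
# Hypothetical connection settings: logical database name -> physical primary host.
# Before promotion, the separate "ci" connection still points at the main cluster.
BEFORE_PROMOTION = {"main": "main-primary", "ci": "main-primary"}
# After promotion, only the "ci" entry changes; application code stays the same.
AFTER_PROMOTION = {"main": "main-primary", "ci": "ci-primary"}


def write_host(logical_db: str, connections: dict[str, str]) -> str:
    """Resolve the physical host for writes made through a logical connection."""
    return connections[logical_db]


def save_pipeline(connections: dict[str, str]) -> str:
    """Hypothetical application call: CI writes always go through the "ci" connection."""
    return write_host("ci", connections)


print(save_pipeline(BEFORE_PROMOTION))  # main-primary
print(save_pipeline(AFTER_PROMOTION))   # ci-primary
```

Because the application already uses the ci connection in production, promotion becomes mostly a configuration change rather than a code change.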

What is left is promoting the ci standby cluster so that all CI reads and writes are accepted on that cluster.

How we're working to ensure a smooth split

The Pods group is working closely with our SREs, DBREs and Quality to rehearse for the production change. These rehearsals include dry runs, executing the promotion of the ci database cluster, and testing our rollback strategies. All of these steps are tracked as part of a CI decomposition change template. This template is continuously improved to ensure that we capture all learnings from the rehearsals. The template is mirrored onto our Ops GitLab instance, which will remain available during the downtime window and forms the basis for executing the change.

The general process of the split can be described as follows:

- Running health checks

- Stopping all incoming traffic

- Promoting the CI database cluster to take reads and writes

- Running QA

- Allowing incoming traffic
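The ordered steps above, together with a rollback plan, can be sketched as a simple runbook executor. This is a hypothetical illustration only: each step is a placeholder function that reports success or failure, and the actual change is driven by the CI decomposition change template described above, not by code like this.

```python
from typing import Callable

# A step is a human-readable name plus a placeholder action that reports success.
Step = tuple[str, Callable[[], bool]]


def run_change(steps: list[Step], rollback: Callable[[str], None]) -> bool:
    """Run steps strictly in order; on the first failure, stop and invoke the rollback plan."""
    for name, action in steps:
        print(f"running: {name}")
        if not action():
            print(f"failed: {name}")
            rollback(name)
            return False
    return True


def rollback(failed_step: str) -> None:
    """Hypothetical rollback hook; a real rollback plan involves many more steps."""
    print(f"rolling back after failed step: {failed_step}")


# Placeholder actions that always succeed; real checks would query the systems involved.
steps: list[Step] = [
    ("run health checks", lambda: True),
    ("stop all incoming traffic", lambda: True),
    ("promote ci cluster to take reads and writes", lambda: True),
    ("run QA", lambda: True),
    ("allow incoming traffic", lambda: True),
]

print(run_change(steps, rollback))  # prints each step, then True
```

Stopping at the first failed step keeps later steps, such as re-allowing traffic, from running against a system that failed QA.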

We have developed and are extensively testing rollback plans.

A detailed timeline is available, and we publish daily asynchronous technical updates on our progress.