A couple of months ago, my Pulumi colleague Sean Holung, a staff software engineer, and I had the opportunity to present "Are you about to break prod? Acceptance Testing with Ephemeral Environments" at KubeCon NA 2019. In this talk, we covered what an ephemeral environment is and how to create one, and then we walked the audience through a concrete example. Given our limited time, we had to move quickly through a ton of information. This post will recap our presentation and add a few more details we weren't able to cover.

As software engineers, our job is to deliver business value. To do this, we need to deliver software both quickly and reliably.

So the question we ask you is: are you about to break prod? Everyone will break production at some point because there are things we miss. As independent software lead Alexandra Johnson sums up so well in a tweet: "Failures are part of the cost of building and shipping large systems." Building a robust pipeline allows us to move quickly in the case of failure and gain confidence around making changes to our infrastructure and applications.

Big takeaway from #KubeCon: none of us want to break prod, but failures are part of the cost of building and shipping large systems. Using tools like #AcceptanceTesting (@eckrengel) and #ChaosEngineering (@Ana_M_Medina) can increase your confidence in your infrastructure changes!

— Alexandra Johnson (@alexandraj777) November 23, 2019

With this in mind, we use Pulumi and GitLab to build a pipeline that validates our application, our infrastructure, and our deployment process.

Ephemeral environments

What is an ephemeral environment? It is a short-lived environment that mimics a production environment. To maintain agility, the environment's boundaries encompass only the first-level dependencies of the particular microservice being deployed. This means you don't have to spin up every single microservice or piece of infrastructure that's running in production. However, you may need to spin up extra pieces of infrastructure to properly test the microservice. For example, you may need to create a subscription to the PubSub topic your microservice writes to. This subscription allows your acceptance tests to pull from the topic and validate that an outbound message was published.
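To make the boundary idea concrete, here is a minimal Go sketch, assuming a service's dependencies are modeled as a simple map from each component to the components it depends on. The functions `firstLevelDeps` and `ephemeralResources`, and the resource names used with them, are hypothetical and not part of the demo repository. The point is only that direct dependencies are included, transitive ones are not, and test-only extras such as a subscription are added on top.

```go
package main

import "sort"

// firstLevelDeps returns the direct dependencies of service, deduplicated
// and sorted. Transitive dependencies are deliberately left out: they sit
// outside the ephemeral environment's boundary.
func firstLevelDeps(graph map[string][]string, service string) []string {
	seen := make(map[string]bool)
	deps := []string{}
	for _, d := range graph[service] {
		if !seen[d] {
			seen[d] = true
			deps = append(deps, d)
		}
	}
	sort.Strings(deps)
	return deps
}

// ephemeralResources returns what an ephemeral environment for service
// would create: its first-level dependencies plus test-only extras, such
// as a subscription the acceptance tests pull from.
func ephemeralResources(graph map[string][]string, service string, testOnly []string) []string {
	return append(firstLevelDeps(graph, service), testOnly...)
}
```

For a service that writes to a topic and a bucket, where some other consumer reads the topic, the environment would contain the bucket, the topic, and the test subscription, but not the downstream consumer.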

Why this is important

Infrastructure is a key part of an application's behavior, and both the architecture and the requirements are continually evolving. How can you incorporate them into a testing suite that gives you a high degree of confidence?

Ephemeral environments allow you to integrate infrastructure and deployment processes into a testing suite. Because they are created from the same infrastructure code as production, they keep your testing environment in sync with production and therefore allow you to iterate quickly to meet new requirements.

Ephemeral environments also encourage you to lean on automated tests over manual tests. If ephemeral environments replace a long-lived testing environment, they only exist for a short time, so there is no opportunity to go in and run a manual check. Shifting your mindset to automated tests can be challenging, yet it's imperative that we do so. Automated tests help ensure your application behaves as expected today as well as months from now when you're out on vacation.

Our demo application

To demonstrate the effectiveness of integrating acceptance testing with ephemeral environments into your deployment process, we created a simple demo application. The service is written in Go and accepts a message on the /message endpoint, then places it in a storage bucket and sends a notification about the new object on a PubSub topic. The code for this application lives in our main.go file. While you can walk through this code yourself, the most important thing to call out is that our application is configurable. It reads its configuration at the very beginning of the main function and shuts down if any of the values are not present.

```go
func main() {
	...
	// Get configuration from environment variables. These are
	// required configuration values, so we use a helper
	// function to get the values and exit if a value is not set.
	project := getConfigurationValue("PROJECT")
	topicName := getConfigurationValue("TOPIC")
	bucketName := getConfigurationValue("BUCKET")
	...
}

// getConfigurationValue returns the value of envVar, logging and exiting
// if the variable is unset or empty.
func getConfigurationValue(envVar string) string {
	value := os.Getenv(envVar)
	if value == "" {
		log.Fatalf("%s not set", envVar)
	}
	log.Printf("%s: %s", envVar, value)
	return value
}
```
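The helper above exits on the first missing variable. If you would rather see every missing value in a single run, and be able to unit-test the check, one option is to collect the gaps and return an error. This is a hypothetical variant, not the demo's code: `Config`, `loadConfig`, and the injected `getenv` parameter are illustrative names.

```go
package main

import (
	"fmt"
	"strings"
)

// Config holds the values main reads via getConfigurationValue.
type Config struct {
	Project, Topic, Bucket string
}

// loadConfig reads every required value through getenv (pass os.Getenv in
// production) and reports all missing variables at once instead of exiting
// on the first one. Returning an error rather than calling log.Fatalf keeps
// the function unit-testable.
func loadConfig(getenv func(string) string) (Config, error) {
	var missing []string
	get := func(key string) string {
		v := getenv(key)
		if v == "" {
			missing = append(missing, key)
		}
		return v
	}
	// Composite literal operands are evaluated left to right, so missing
	// keys are reported in the order PROJECT, TOPIC, BUCKET.
	cfg := Config{
		Project: get("PROJECT"),
		Topic:   get("TOPIC"),
		Bucket:  get("BUCKET"),
	}
	if len(missing) > 0 {
		return Config{}, fmt.Errorf("missing required configuration: %s", strings.Join(missing, ", "))
	}
	return cfg, nil
}
```

In main, this would be called as `cfg, err := loadConfig(os.Getenv)`, followed by `log.Fatal(err)` when err is non-nil, which keeps the fail-fast behavior while making the check itself testable.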

Infrastructure

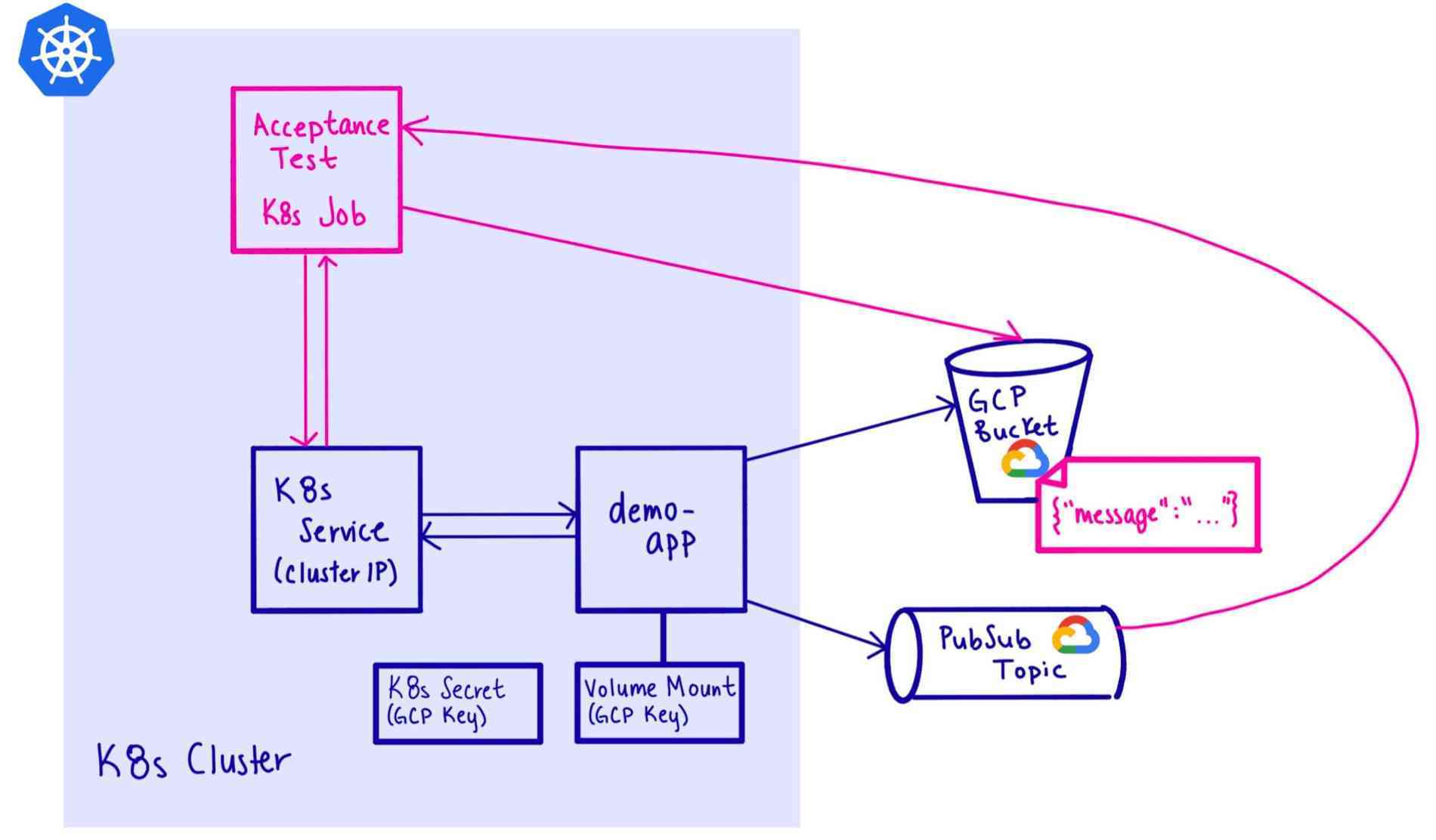

There are many pieces of infrastructure to spin up and we can use Pulumi to easily wire it all together. Our architecture looks like this:

You can check out the Pulumi code that we use to reproduce both our ephemeral environments and production in the infrastructure/index.ts file. The neat thing about using Pulumi is that we can create the Google Cloud Platform (GCP) resources we need and then directly reference them in our Kubernetes deployment. Using Pulumi ensures we're always configuring our application with the correct GCP resources for that environment.

For example, in our Kubernetes deployment, we set the environment variables by using the topic and bucket variables created earlier in index.ts.

```typescript
// Create a K8s Deployment for our application.
const appLabels = { appClass: name };
const deployment = new k8s.apps.v1.Deployment(name, {
    metadata: { labels: appLabels },
    spec: {
        selector: { matchLabels: appLabels },
        template: {
            metadata: { labels: appLabels },
            spec: {
                containers: [{
                    ...
                    env: [
                        { name: "TOPIC", value: topic.name }, // referencing topic just created
                        { name: "BUCKET", value: bucket.name }, // referencing bucket just created
                        { name: "PROJECT", value: project },
                        {
                            name: "GOOGLE_APPLICATION_CREDENTIALS",
                            value: "/var/secrets/google/key.json"
                        },
                    ],
                    ...
                }]
            }
        }
    },
});
```
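Since the application exits when PROJECT, TOPIC, or BUCKET is missing, one cheap safeguard is to check that a container's env list covers every variable main reads. The Go sketch below assumes the resolved env values are available as a plain map; `requiredEnv` and `missingEnv` are hypothetical names. In the real Pulumi program, values like `topic.name` are outputs that only resolve during deployment, so a check like this would run against resolved values.

```go
package main

// requiredEnv lists the variables main reads through
// getConfigurationValue.
var requiredEnv = []string{"PROJECT", "TOPIC", "BUCKET"}

// missingEnv reports which required variables the provided env does not
// set, or sets to an empty value, in the order they are required.
func missingEnv(required []string, provided map[string]string) []string {
	var missing []string
	for _, name := range required {
		if provided[name] == "" {
			missing = append(missing, name)
		}
	}
	return missing
}
```

Extra variables such as GOOGLE_APPLICATION_CREDENTIALS are simply ignored; only the variables the application treats as required are checked.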

Acceptance tests

The acceptance tests validate that our service, when stood up, functions as expected. They run against an ephemeral environment, and they live in the acceptance/acceptance_test.go file. You'll notice we're once again using the helper function getConfigurationValue. Our acceptance tests must also be configured, to ensure they're validating against the correct resources for that particular ephemeral environment.

Since the service is only accessible from within the Kubernetes cluster, we use a Kubernetes job to run our acceptance tests. A Kubernetes job is a good technique when your CI runs externally, such as in GitLab, and you do not want to expose your service publicly. Our ephemeral environment plus acceptance test looks like this:

We spin up a Kubernetes Job and additional resources by using an if statement at the bottom of our infrastructure/index.ts file. The conditional depends on the environment's name as follows:

```typescript
// If it's a test environment, set up acceptance tests.
let job: k8s.batch.v1.Job | undefined;
if (ENV.startsWith("test")) {
    job = acceptance.setupAcceptanceTests({
        ...
    });
}

// Export the acceptance job name, so we can get the logs from our
// acceptance tests.
export const acceptanceJobName = job ? job.metadata.name : "unapplicable";
```
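For readers following along in Go, the same gating logic can be expressed as a couple of small functions, together with the stack naming the pipeline uses (rocore/$ENV-app). These are hypothetical helpers that mirror the TypeScript and the `pulumi stack init` command; the demo itself does this in index.ts and the GitLab job.

```go
package main

import "strings"

// isTestEnv mirrors ENV.startsWith("test") in index.ts: only environments
// whose name begins with "test" get the acceptance test Job.
func isTestEnv(env string) bool {
	return strings.HasPrefix(env, "test")
}

// acceptanceJobName mirrors the exported stack output: the Job's name in a
// test environment, otherwise the "unapplicable" placeholder.
func acceptanceJobName(env, jobName string) string {
	if isTestEnv(env) {
		return jobName
	}
	return "unapplicable"
}

// stackName follows the pipeline's `pulumi stack init rocore/$ENV-app`.
func stackName(org, env string) string {
	return org + "/" + env + "-app"
}
```

Note that a bare prefix check also matches names like "testing"; if that matters, a stricter prefix such as "test-" is an easy tightening.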

That covers all the major aspects of our application and infrastructure, and if you'd like to view the code in detail, it is available in our demo-app GitLab repository.

Our pipeline

When developing a new service, we must establish a solid deployment strategy upfront. We want to make sure we're building in quality from day one. As we develop the service, we can add acceptance tests for every feature while the context and requirements are still fresh in our minds. This helps us maintain thorough coverage of our app's functionality.

We used GitLab to set up our pipeline. We chose GitLab because it's straightforward to set up and allows us to run our pipeline on our Docker image of choice. We use a base image that has all our dependencies installed and then reference that Docker image and tag in our demo-app pipeline. The Docker image allows us to bundle and version the dependencies for building our application and infrastructure.

- Test and Build: This stage runs our unit tests and builds both our application and acceptance test images. To build our images, we used Kaniko, a tool for building images within a container or Kubernetes cluster. GitLab has excellent documentation on how to incorporate Kaniko into your pipeline. The application image is immutable and is used both for running our acceptance tests and for deploying to production.

- Acceptance Test: This stage spins up our ephemeral environments and runs our acceptance tests. It acts as a quality gate, catching issues before they reach production.

Our ephemeral environment and Kubernetes job are all spun up in the `script` portion of the acceptance test job definition. We do a bit of setup for our new acceptance test stack and then run `pulumi up`. Here is the printout from our acceptance tests:

```
...
$ pulumi stack init rocore/$ENV-app
Logging in using access token from PULUMI_ACCESS_TOKEN
Created stack 'rocore/test-96425413-app'
$ pulumi config set DOCKER_TAG $DOCKER_TAG
$ pulumi config set ENV $ENV
$ pulumi config set gcp:project rocore-k8s
$ pulumi config set gcp:zone us-west1-a
$ pulumi up --skip-preview
Updating (rocore/test-96425413-app):
...
Resources:
    + 16 created
Duration: 4m10s
Permalink: https://app.pulumi.com/rocore/demo-app/test-96425413-app/updates/1
```

The `after_script` destroys our stack and prints the logs of both our Kubernetes job and deployment, which help with debugging if our tests fail. We use the `after_script` to make sure that we always clean up and print logs, even when our acceptance tests fail.

```
...
$ pulumi stack select rocore/$ENV-app
$ kubectl logs -n rocore --selector=appClass=$ENV-demo-app-acc-test --tail=200
=== RUN TestSimpleHappyPath
=== RUN TestSimpleHappyPath/message_is_sent_to_PubSub_topic
=== RUN TestSimpleHappyPath/message_is_stored_in_bucket
```