In our just-released survey of over 4,000 developers, security professionals, and operations team members, there was one thing everyone agreed on: 50% of each group said software testing is the biggest reason why development is delayed. Testers have long been the underdogs in the SDLC and that viewpoint is apparently very slow to change. To understand what’s really going on, and how things work at GitLab, we asked Mek Stittri, director of quality engineering, to share his perspective on what’s working with test today and what’s in need of improvement.

Why is test a continued DevOps problem?

It’s a two-part answer, Mek says. First, there are simply not enough tests run and second, the tests that are used are often flaky (meaning their results aren’t necessarily trustworthy).

On the issue of not running enough tests, Mek says it’s an area GitLab is addressing. “At GitLab, I think we are better than other companies where developers write unit tests and integration tests every time a change goes in,” he says. “That is great, but that testing is at a lower level, and it doesn't really map to a business use case.” To write better tests a team needs test requirements, but with so many different sets of stakeholders it can be tough to get their input on test requirements and not just feature requirements. “We are improving it here at GitLab where our VP of Product Scott Williamson is doing a great job. We have a section for test requirements right now (in the issue and merge request templates). It's now a blank and free form for people to fill in, but it should be highlighted going forward as a required section taking input from product discovery and validation as a deliverable.”

The bottom line: the stakeholders who are delivering the code need to understand the end goal better. “Unit tests test code at a smaller scale, and that’s great, but it doesn’t really verify the functionality works end to end as a whole. We need more coverage and more understanding of what needs to be tested.”

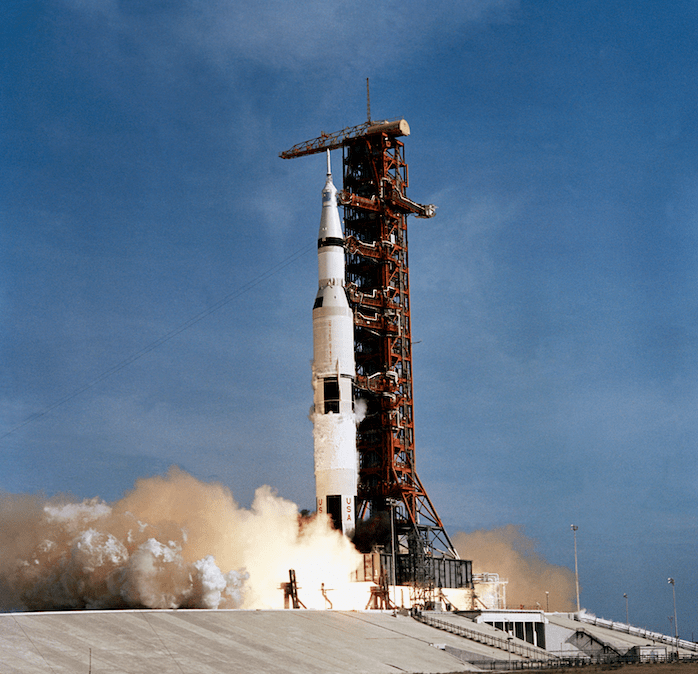

Apollo 11 is held up by a framework and software is no different.

Mek likens this process to Apollo 11. Everyone is excited about the rocket (the software features, in other words) but no one pays attention to the red scaffolding on the right that’s actually holding the rocket up. “That’s the side that nobody looks at but it’s a lot of work,” he says. “It’s taller than the rocket. We need to build that platform to have adequate testing (functional, performance, etc).” The ideal situation to get a company there? Start building the test framework and add test coverage at the exact same time the product is being built. “You assemble it together, run it, it’s passing and we go for launch and it’s shipped. We’re not there yet. And I can assure you a lot of companies out there aren’t there yet either.”

About those flaky tests…

“There are a lot of test automation engineers and test developers out there, but not all of them know how to write and design a good test,” Mek explains. Automated tests need to function like a flow of self-retrying dominoes: if one step is not completed, it keeps retrying until it can reach the next step. Tests need to mimic what a manual tester would do, he says. No manual tester is going to click on a button and then wait 10 minutes. The tester will click again, or try other strategies. “At GitLab we put emphasis on test framework reliability and we treat each user workflow step like a piece of retrying dominoes. We need to make sure all the dominoes fall over so the workflow is completed,” Mek says.
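To make the "retrying dominoes" idea concrete, here is a minimal Python sketch. It is not GitLab's test framework: the names `run_step`, `run_workflow`, `StepFailed`, and `make_flaky` are hypothetical, and each workflow step is assumed to be a function that returns `True` once it has succeeded.

```python
import time


class StepFailed(Exception):
    """Raised when a step never succeeds within its retry budget."""


def run_step(name, action, attempts=3, delay=0.0):
    """Retry one workflow step; return the attempt number that succeeded."""
    for attempt in range(1, attempts + 1):
        if action():
            return attempt
        if attempt < attempts:
            time.sleep(delay)  # brief pause before trying again
    raise StepFailed(f"step {name!r} did not complete after {attempts} attempts")


def run_workflow(steps, attempts=3, delay=0.0):
    """Run steps in order; a step must 'fall over' before the next one starts."""
    return {name: run_step(name, action, attempts, delay) for name, action in steps}


def make_flaky(fail_times):
    """Hypothetical stand-in for a UI action that fails its first N calls."""
    calls = {"n": 0}

    def action():
        calls["n"] += 1
        return calls["n"] > fail_times

    return action


steps = [
    ("open page", make_flaky(0)),
    ("click button", make_flaky(2)),
    ("verify result", make_flaky(1)),
]
result = run_workflow(steps, attempts=5)
print(result)  # {'open page': 1, 'click button': 3, 'verify result': 2}
```

The retry budget is per step rather than per test, so a slow button does not force the whole workflow to start over. A step that never succeeds still fails loudly instead of hanging.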

So companies need to think through how the tests work, but also test the right things. If that happens, quality can be everyone’s responsibility in the end, Mek says. “We want developers to contribute to the end-to-end test so you want to make a test framework that is easy to use and easy to read. I think this all factors in.” And Mek points out it really is in everyone’s best interests to think about quality first. “Let's make the process better so we work smarter, right? We achieve more without having to work weekends or get pinged during your family dinner. Nobody wants that.”

Test automation and machine learning

Test automation is a cornerstone of successful DevOps but it remains difficult for many companies to achieve. Mek’s take: “We need to design the product such that the test automation framework can integrate into it well,” he says flatly. That requires good collaboration with development teams, because frontend UI locators and backend APIs are the interfaces that make test automation better and more stable. “Go back to Apollo 11,” Mek says. “It's like the connections along the rocket's fuselage. I need to integrate with this to make sure things are working fine. The probes and sensors need to be there. So if those aren't there, then your test automation engineers need to code around these obstacles. It's not working smart.” In other words, the test automation framework should not have to take the longer route when executing user interactions against the application, because that can be a source of unstable and inefficient tests.

One step that can help companies – including GitLab – get there is machine learning. “We are having discussions here at GitLab about where we want a bot,” Mek says. “I think machine learning will come and help, but the input and output needs to be clearly defined so you have a clear implementation direction, TensorFlow, Linear Regression, or whatever techniques. You can write a bot that just lives in the product, meaning it looks at all the UI locators (dedicated to test automation) on a page and randomly clicks one of those links.” This GitLab bot of the future will work 24/7, clicking, clicking, clicking on the page until it errors out or runs into a 404, Mek says. The goal is to create a bot that is like a “menacing QA engineer” that can be programmed to keep banging on the problematic areas until everything is solved. To get there will require lots of data – machine learning literally needs to learn from data and experience – and although there are a handful of companies experimenting with this now, this is all still very early stage.
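The random-clicking part of that bot can be sketched without any machine learning at all. The Python below is an illustration only: `SITE` is a made-up in-memory map of pages to their test-automation locators (treated here as link targets), `monkey_walk` is a hypothetical name, and a link that points to a page missing from the map stands in for a 404.

```python
import random

# Hypothetical site map: page -> link targets exposed via test locators.
SITE = {
    "/": ["/projects", "/issues"],
    "/projects": ["/", "/issues", "/settings"],
    "/issues": ["/", "/missing"],  # "/missing" is not a page: simulated 404
    "/settings": ["/"],
}


def monkey_walk(site, start="/", max_clicks=1000, seed=None):
    """Click random links until a broken one is hit or the budget runs out.

    Returns (path, broken) where broken is the missing page, or None.
    """
    rng = random.Random(seed)  # seeded so a failing walk can be replayed
    page = start
    path = [page]
    for _ in range(max_clicks):
        links = site[page]
        if not links:  # dead end: nothing left to click
            break
        page = rng.choice(links)
        path.append(page)
        if page not in site:
            return path, page  # the bot "ran into a 404"
    return path, None


path, broken = monkey_walk(SITE, seed=42)
print(f"clicks: {len(path) - 1}, broken link: {broken}")
```

Recording the seed and the path is the design choice that matters here, because a random bot is only useful if the failure it finds can be reproduced. Deciding which areas to keep "banging on" is where learned models would come in, and that part is not shown.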

Where we’re headed with testing

Mek and his team hope to increase both quality and productivity this year which may be a bit of a balancing act, since more “quality” equals more testing which can result in a longer development cycle and perhaps reduced productivity (this is why we say test automation engineers are often unappreciated!). “My department is working this quarter to have a full suite of automated tests for our enterprise features. We want to have a big checkbox for the enterprise features every time we deploy. We need this because it is mapping to the business use case.” But Mek and team need to do all of that while shortening the test runtime for developers. “You want more test coverage but we need to keep the runtime low because we can’t have developers and release managers wait two hours.”

The plan is to add more runners, optimize them, de-duplicate some tests and make sure the process is as streamlined as it can be. “Right now it takes about an hour or so, but I would love to have it down to 30 minutes where we certify that this merge request going in checks all the boxes and all the enterprise features are not broken. We need to set ourselves an aggressive goal and I would say 30 minutes is a good first step.”
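As a rough illustration of why adding runners shortens the wait, the sketch below spreads test suites across runners using a greedy longest-first heuristic. This is a generic scheduling technique, not a description of how GitLab assigns jobs. The suite names and durations are invented, and `split_across_runners` is a hypothetical helper.

```python
import heapq


def split_across_runners(durations, runners):
    """Assign suites to runners, longest first, always to the least-loaded runner.

    Returns (assignment, wall_clock) where wall_clock is the busiest runner's total.
    """
    if runners < 1:
        raise ValueError("need at least one runner")
    heap = [(0, i) for i in range(runners)]  # (minutes assigned, runner index)
    heapq.heapify(heap)
    assignment = [[] for _ in range(runners)]
    ordered = sorted(durations.items(), key=lambda kv: kv[1], reverse=True)
    for name, minutes in ordered:
        load, idx = heapq.heappop(heap)  # least-loaded runner so far
        assignment[idx].append(name)
        heapq.heappush(heap, (load + minutes, idx))
    wall_clock = max(load for load, _ in heap)
    return assignment, wall_clock


# Invented durations in minutes, totaling 60.
suites = {"a": 20, "b": 15, "c": 10, "d": 10, "e": 5}

_, one_runner = split_across_runners(suites, 1)
plan, two_runners = split_across_runners(suites, 2)
print(one_runner, two_runners)  # 60 30
```

With these made-up numbers a second runner halves the wall-clock time, but only because the suites happen to divide evenly. One very long suite would cap the gain, which is why de-duplicating and trimming tests matters alongside adding runners.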

Cover image by Kurt Cotoaga on Unsplash