Updated on: September 9, 2025


Measuring AI ROI at scale: A practical guide to GitLab Duo Analytics

Learn how to transform raw usage data into actionable business insights and ROI calculations with this in-depth tutorial.

AI investment starts with measurement. Building a successful AI-powered development platform begins with understanding actual usage, adoption patterns, and quantifiable business value — especially ROI from GitLab Duo Enterprise.

To help our customers maximize their AI investments, we developed the GitLab Duo Analytics solution as part of our Duo Accelerator program — a comprehensive, customer-driven solution that transforms raw usage data into actionable business insights and ROI calculations. This is not a GitLab product, but rather a specialized enablement tool we created to address immediate analytics needs while organizations transition toward comprehensive AI productivity measurement.

This foundation enables broader AI transformation. For example, organizations can use these insights to optimize license allocation, identify high-value use cases, and build compelling business cases for expanding AI adoption across development teams.

A leading financial services organization partnered with a GitLab customer success architect through the Duo Accelerator program to gain visibility into their GitLab Duo Enterprise investment. Together, we implemented a hybrid analytics solution that combines monthly data collection with real-time API integration, creating a scalable foundation for measuring AI productivity gains and optimizing license utilization at enterprise scale.

The challenge: Measuring AI ROI in enterprise development

Before implementing any analytics solution, it's essential to understand your AI measurement landscape.

Consider:

- What GitLab Duo features need measurement (code suggestions, chat assistance, security scanning)?

- Who are your AI users (developers, security teams, DevOps engineers)?

- What business metrics matter (time savings, productivity gains, cost optimization)?

- How does your current data collection work (manual exports, API integration, existing tooling)?

Use this stage to define your:

- ROI measurement framework

- Key performance indicators (KPIs)

- Data collection strategy

- Stakeholder reporting requirements

Sample ROI measurement framework

Step-by-step implementation guide

Important: The solution below describes an open source approach that you can deploy in your own environment. It is NOT a commercial product from GitLab that you need to purchase. You should be able to download, customize, and run this solution free of charge.

Prerequisites

Before starting, ensure you have:

- GitLab instance with Duo enabled

- GitLab API token with read permissions

- Access to configure GitLab CI/CD variables

- Basic understanding of GitLab CI/CD pipelines

1. Initial setup and configuration

Let's set up the project environment by first cloning the repository.

    git clone https://gitlab.com/gitlab-org/professional-services-automation/tools/utilities/gitlab-graphql-api.git
    cd gitlab-graphql-api

Then push it to your own GitLab instance or namespace, and navigate to your project in GitLab to configure the CI/CD variables.

2. Configure pipeline control variables

Control which analytics pipelines run by setting Project CI/CD Variables. Go to Project Settings → CI/CD → Variables and add:

| Variable | Default | Description |
|---|---|---|
| ENABLE_DUO_METRICS | "true" | Enable/disable Duo AI metrics pipeline |
| ENABLE_PROJECT_METRICS | "false" | Enable/disable traditional project metrics pipeline |

Example configurations:

- Duo Only: ENABLE_DUO_METRICS="true", ENABLE_PROJECT_METRICS="false"

- Both Dashboards: ENABLE_DUO_METRICS="true", ENABLE_PROJECT_METRICS="true"

- Disable All: ENABLE_DUO_METRICS="false", ENABLE_PROJECT_METRICS="false"
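As a rough illustration of how these flags behave, the sketch below shows one way a script could interpret them. It is not taken from the repository: the function name `enabled_pipelines` is hypothetical, and the only facts it relies on are the variable names and defaults listed in the table above.

```python
import os


def enabled_pipelines(env=None):
    """Return the flag names whose pipelines would run (hypothetical helper)."""
    env = os.environ if env is None else env
    # Defaults follow the table above: Duo metrics on, project metrics off.
    defaults = {"ENABLE_DUO_METRICS": "true", "ENABLE_PROJECT_METRICS": "false"}
    selected = []
    for name, default in defaults.items():
        # CI/CD variables arrive as strings, so compare against the text "true".
        if env.get(name, default).strip().lower() == "true":
            selected.append(name)
    return selected


# "Both Dashboards" configuration from the examples above.
print(enabled_pipelines({"ENABLE_DUO_METRICS": "true", "ENABLE_PROJECT_METRICS": "true"}))
```

Because CI/CD variables are plain strings, a value such as "True" or " true " is normalized before the comparison; anything else is treated as disabled.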

3. Configure data ingestion

Configure the data ingestion in your .gitlab-ci.yml file. This creates raw project metadata shared across all metrics.

Basic configuration variables:

    variables:
      GROUP_PATH: "gitlab-org/professional-services-automation" # Your group path
      INCLUDE_SIMPLE_FIELDS: "fullPath,name,description" # Fields to fetch
      ARGUMENT_FIELDS: "mergeRequests" # Argument fields requiring additional config
      LIMIT: "100" # Projects per API call
      MAX_ITERATIONS: "2" # Maximum API calls (for testing)
      GITLAB_GRAPHQL_API_VERSION: "0.1.0" # API version from Package Registry

Configure argument fields for specific metrics:

For each metric you want to collect, define argument field variables:

    # Add these under the same variables: block.

    # Example: Merged Merge Requests
    ARGUMENT_FIELD_1_NAME: "mergeRequests"
    ARGUMENT_FIELD_1_FILTER_NAME: "state"
    ARGUMENT_FIELD_1_FILTER_VALUE: "merged"
    ARGUMENT_FIELD_1_RETURN_VALUES: "count totalTimeToMerge"

    # Example: Packages Count
    ARGUMENT_FIELD_2_NAME: "packages"
    ARGUMENT_FIELD_2_RETURN_VALUES: "count"
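Going by their comments, LIMIT and MAX_ITERATIONS together cap how many projects a run can ingest (at most LIMIT × MAX_ITERATIONS). The sketch below illustrates that bound with a generic cursor-based loop. It assumes cursor pagination and is not the tool's actual implementation; `collect_projects`, `fetch_page`, and `fake_fetch_page` are hypothetical names, and the fake fetcher stands in for a real API call.

```python
def collect_projects(fetch_page, limit=100, max_iterations=2):
    """Collect projects page by page until data runs out or the cap is hit.

    fetch_page(limit, cursor) is a hypothetical callable returning
    (projects, next_cursor); next_cursor is None on the last page.
    """
    projects, cursor = [], None
    for _ in range(max_iterations):
        page, cursor = fetch_page(limit, cursor)
        projects.extend(page)
        if cursor is None:  # no further pages to request
            break
    return projects


def fake_fetch_page(limit, cursor):
    """Stand-in for a real API call: serves 250 made-up project paths."""
    data = [f"group/project-{i}" for i in range(250)]
    start = cursor or 0
    end = min(start + limit, len(data))
    return data[start:end], (end if end < len(data) else None)


# With LIMIT=100 and MAX_ITERATIONS=2, only the first 200 projects are read.
print(len(collect_projects(fake_fetch_page, limit=100, max_iterations=2)))
```

A low MAX_ITERATIONS keeps test runs fast; under this reading, raising it is what lets a production run reach every project in a large group.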

4. Configure metrics aggregation

After data ingestion, set up aggregation rules in .gitlab/Schedule.gitlab-ci.yml for each metric you want to generate.

Example metric job configuration:

    process_average_time_to_merge:
      <<: *process_data_template
      stage: process_data
      variables:
        METRIC_NAME: "9_Average_Time_To_Merge"
        BUSINESS_LEVEL_START: 2
        BUSINESS_LEVEL_END: 4
        AGGREGATE_COLUMNS: "mergeRequests_state_merged_totalTimeToMerge:sum mergeRequests_state_merged_count:sum"
        NEW_COLUMN_NAME: "average_time_to_merge_days"
        NEW_COLUMN_FORMULA: "mergeRequests_state_merged_totalTimeToMerge / mergeRequests_state_merged_count / (24 * 60 * 60)"
        SORT_BY: "average_time_to_merge_days"
        FILTER_CONDITION: "mergeRequests_state_merged_count >= 5"

Required variables for each metric:

| Variable | Description | Example |
|---|---|---|
| METRIC_NAME | Name of the metric (used in file naming) | "9_Average_Time_To_Merge" |
| BUSINESS_LEVEL_START | Starting level for business hierarchy | 1 |
| BUSINESS_LEVEL_END | Ending level for business hierarchy | 3 |
| AGGREGATE_COLUMNS | Columns to aggregate with function | "mergeRequests_state_merged_count:sum" |
| SORT_BY | Column to sort results by | "average_time_to_merge_days" |
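To make the example job's arithmetic concrete, here is a minimal sketch of the same steps in plain Python: sum the two columns per group, divide total time by merge count and by 24 * 60 * 60, drop groups with fewer than five merged merge requests, and sort. It assumes `totalTimeToMerge` is in seconds (which the division by 24 * 60 * 60 suggests). The row layout, the `group` key, and the sample numbers are hypothetical.

```python
from collections import defaultdict

SECONDS_PER_DAY = 24 * 60 * 60


def average_time_to_merge(rows, min_merged=5):
    """Sum per group, derive average days to merge, filter, then sort."""
    totals = defaultdict(lambda: {"seconds": 0.0, "count": 0})
    for row in rows:
        bucket = totals[row["group"]]
        bucket["seconds"] += row["totalTimeToMerge"]  # aggregated with :sum
        bucket["count"] += row["count"]               # aggregated with :sum
    result = [
        {
            "group": group,
            "average_time_to_merge_days": t["seconds"] / t["count"] / SECONDS_PER_DAY,
        }
        for group, t in totals.items()
        if t["count"] >= min_merged  # mirrors FILTER_CONDITION
    ]
    # Mirrors SORT_BY: ascending by the derived column.
    return sorted(result, key=lambda r: r["average_time_to_merge_days"])


# Hypothetical per-project rows, grouped by a made-up business level.
rows = [
    {"group": "team-a", "totalTimeToMerge": 864000, "count": 4},
    {"group": "team-a", "totalTimeToMerge": 864000, "count": 4},
    {"group": "team-b", "totalTimeToMerge": 432000, "count": 10},
    {"group": "team-c", "totalTimeToMerge": 86400, "count": 2},
]
for entry in average_time_to_merge(rows):
    print(entry["group"], entry["average_time_to_merge_days"])
```

Here team-c is filtered out because it has only two merged merge requests, which keeps a handful of outliers from skewing the ranking.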

5. Run a scheduled pipeline to generate analytics

Once configured, run a scheduled pipeline to generate your analytics:

- Go to CI/CD → Schedules

- Create a new schedule or run an existing one

- The pipeline will automatically:

  - Ingest data based on your configuration

  - Aggregate metrics according to your rules

  - Deploy dashboards to GitLab Pages

6. Access your analytics dashboards

After the scheduled pipeline completes successfully, GitLab Pages automatically deploys your dashboards:

- Project Metrics Dashboard: https://your-username.gitlab.io/project-name/existing-metrics/

- Duo Metrics Dashboard: https://your-username.gitlab.io/project-name/duo-metrics/

- Main Landing Page: https://your-username.gitlab.io/project-name/

The main landing page automatically detects which dashboards are available and displays appropriate links.

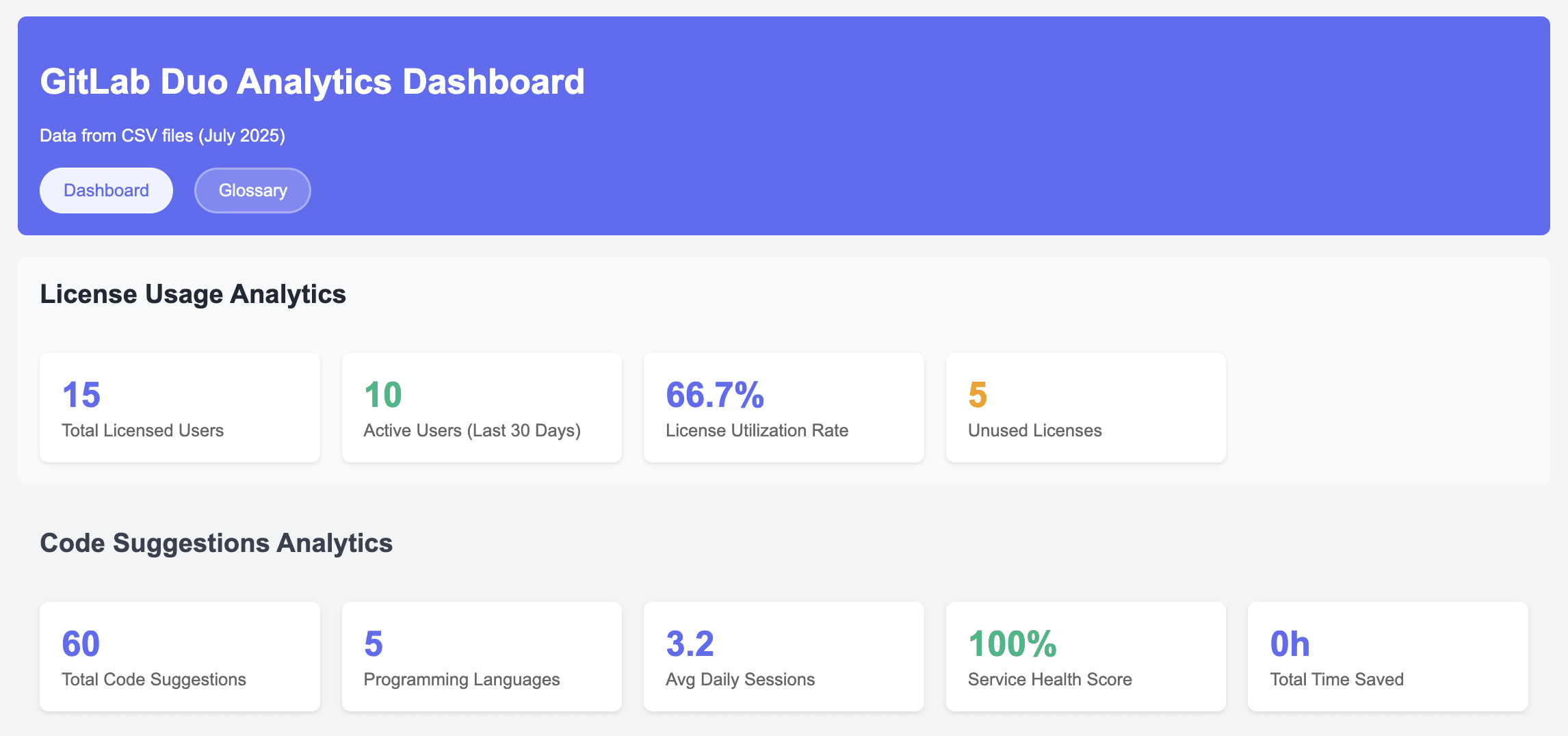

You'll see:

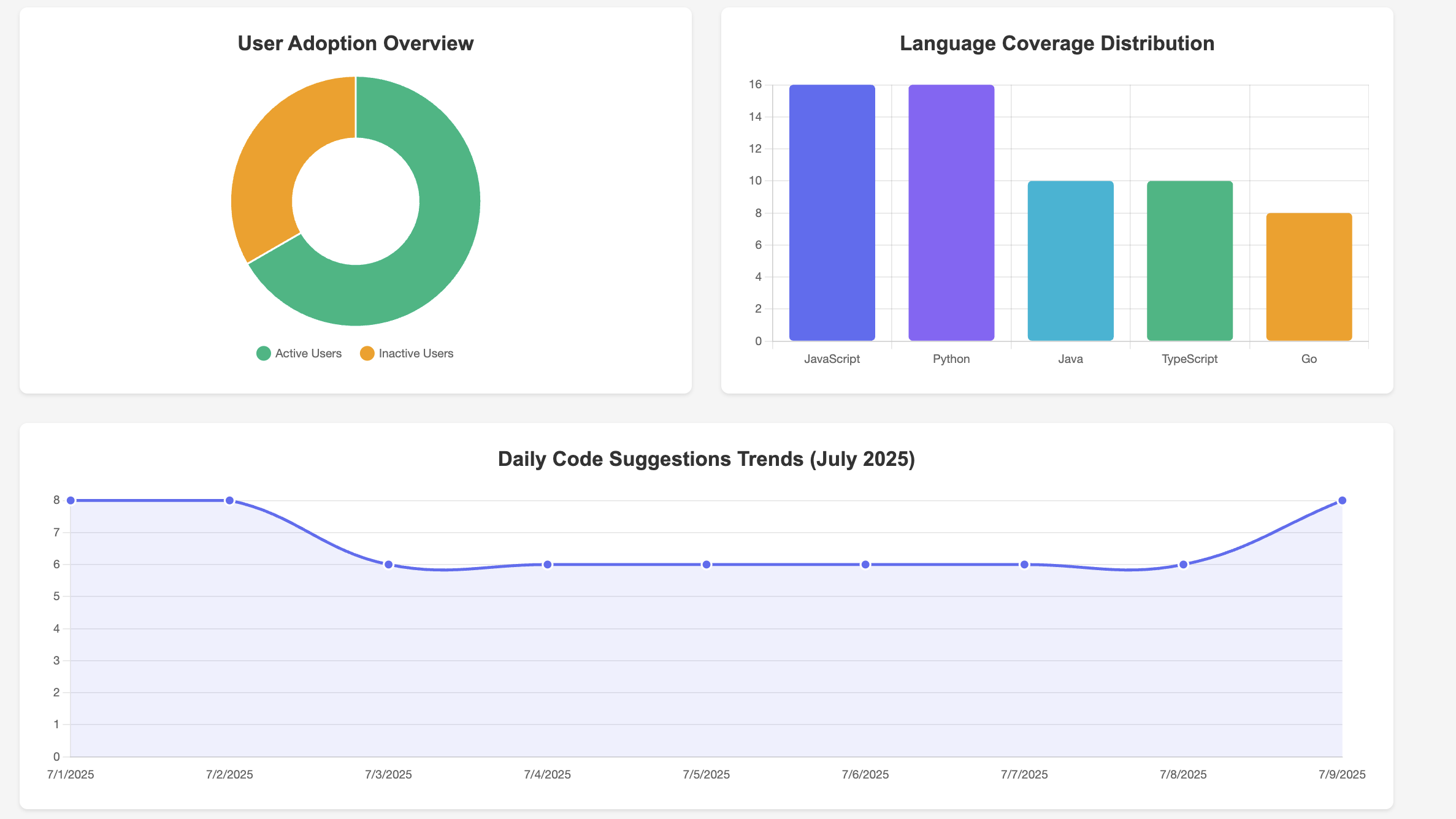

- License utilization: Total licensed users vs. active users (together with code suggestion analytics)

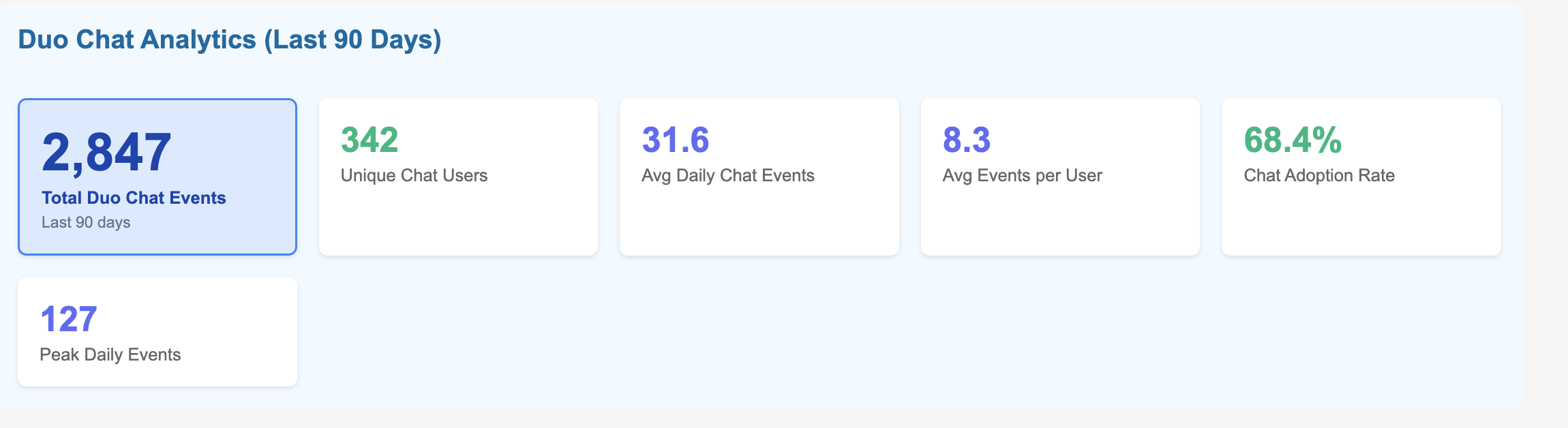

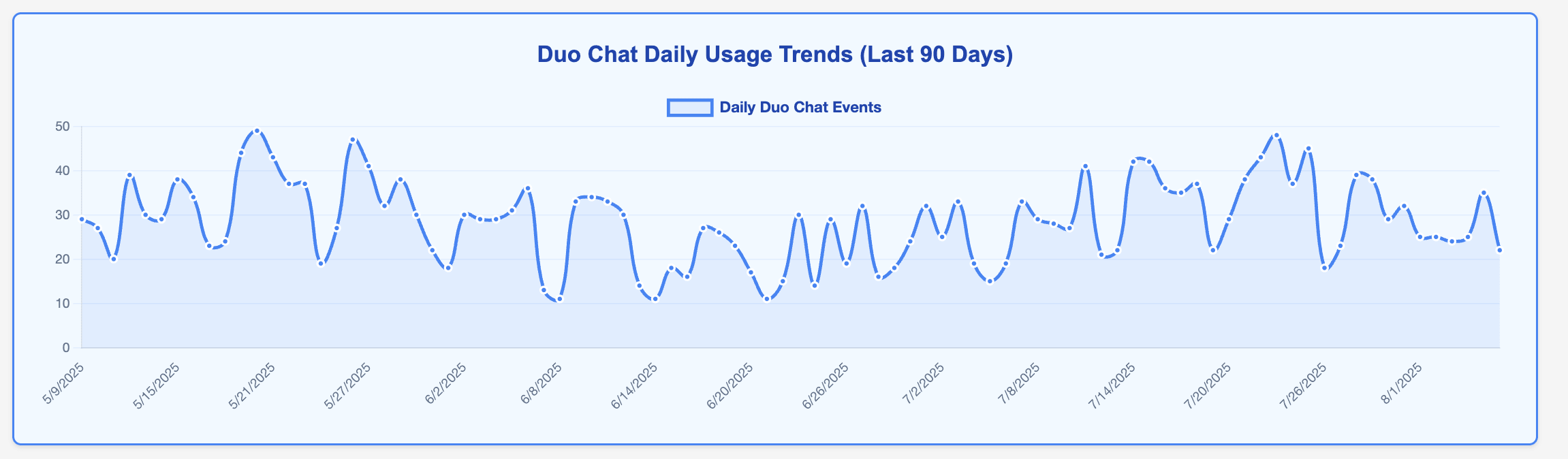

- GitLab Duo Chat analytics: Unique Duo Chat users, average Chat events over 90 days, and Chat adoption rate

- GitLab Duo engagement analytics: Categorizes users as Power (10+ suggestions), Regular (5-9), or Light (1-4) based on usage patterns

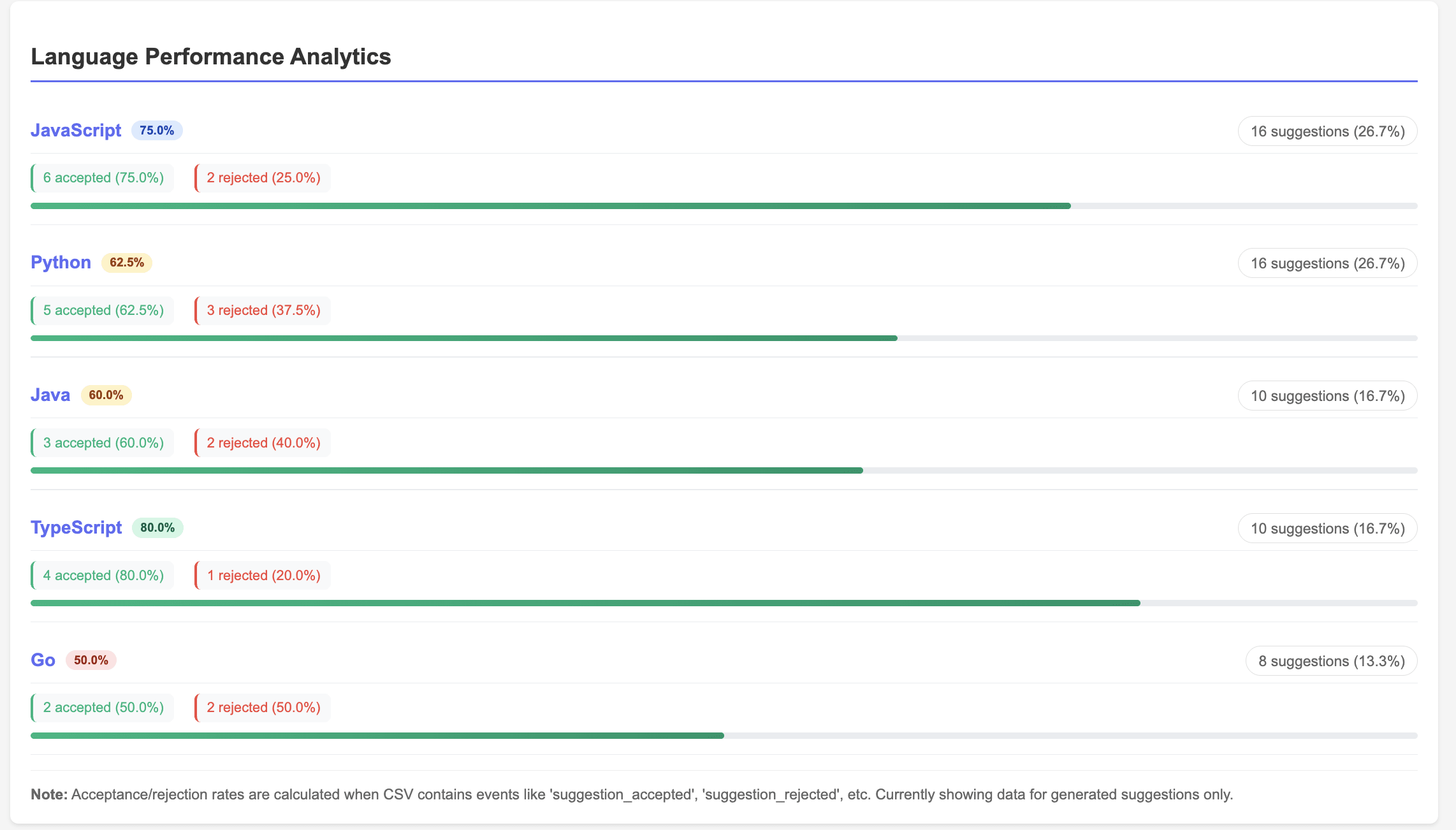

- Usage analytics: Code suggestions by programming language (language coverage distribution) and code suggestion performance by language (accepted vs. rejected rate)

- Weekly Duo Chat trends: Duo Chat usage patterns

Dashboard features include:

- License Usage Analytics - Track licensed vs active users

- Code Suggestions Analytics - Monitor acceptance rates and language distribution

- Duo Chat Analytics - View chat interactions and adoption rates

- User Engagement Analytics - Categorize users by activity level

- Language Performance Analytics - Analyze acceptance rates by programming language
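The Power, Regular, and Light tiers described above reduce to a simple threshold rule. The sketch below shows that rule in Python. The thresholds come from the dashboard description; the function names, the "Inactive" label for users with no suggestions, and the sample usernames are assumptions added for illustration.

```python
from collections import Counter


def engagement_tier(suggestions):
    """Map a user's suggestion count to an engagement tier."""
    if suggestions >= 10:
        return "Power"
    if suggestions >= 5:
        return "Regular"
    if suggestions >= 1:
        return "Light"
    return "Inactive"  # assumed label; the dashboard text names only three tiers


def tier_distribution(counts_by_user):
    """Count how many users fall into each tier."""
    return Counter(engagement_tier(n) for n in counts_by_user.values())


# Hypothetical per-user suggestion counts.
usage = {"alice": 14, "bob": 7, "carol": 2, "dave": 0, "erin": 10}
print(tier_distribution(usage))
```

A distribution like this is one way to spot where targeted training might move Light users toward regular use.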

7. Understanding the APIs used

The solution leverages multiple GitLab APIs to collect comprehensive usage data:

AI usage data API (aiUsageData)

    # Fetches individual code suggestion events
    query: |
      {
        group(fullPath: "your-group") {
          aiUsageData {
            codeSuggestionEvents {
              event # ACCEPTED or SHOWN
              timestamp # When it happened
              language # Programming language
              suggestionSize # SINGLE_LINE or MULTI_LINE
              user { username }
            }
          }
        }
      }
    # Purpose: Tracks every code suggestion shown or accepted by developers
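Once events like these are collected, a per-language acceptance rate is a matter of counting. The following sketch assumes each event has already been flattened into a dictionary with `event` and `language` keys, and that the event values are the short forms ACCEPTED and SHOWN used in the comment above; the values your instance returns may be named differently. The function name and sample events are hypothetical.

```python
from collections import defaultdict


def acceptance_by_language(events):
    """Return accepted/shown ratio per programming language."""
    counts = defaultdict(lambda: {"shown": 0, "accepted": 0})
    for event in events:
        bucket = counts[event["language"]]
        if event["event"] == "SHOWN":
            bucket["shown"] += 1
        elif event["event"] == "ACCEPTED":
            bucket["accepted"] += 1
    return {
        language: (c["accepted"] / c["shown"] if c["shown"] else 0.0)
        for language, c in counts.items()
    }


# Hypothetical flattened events.
events = (
    [{"event": "SHOWN", "language": "python"}] * 4
    + [{"event": "ACCEPTED", "language": "python"}]
    + [{"event": "SHOWN", "language": "go"}] * 2
    + [{"event": "ACCEPTED", "language": "go"}]
)
print(acceptance_by_language(events))
```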

GitLab Self-Managed add-on users API

    # Gets licensed user information
    query: |
      {
        selfManagedAddOnEligibleUsers(
          addOnType: DUO_ENTERPRISE
          filterByAssignedSeat: "Yes"
        ) {
          user {
            username
            lastDuoActivityOn
          }
        }
      }
    # Purpose: Identifies who has licenses and when they last used Duo
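The `lastDuoActivityOn` field is what makes a licensed-versus-active comparison possible. As a sketch, the function below counts a user as active if their last activity falls within a chosen window. The 30-day window, the ISO date string format, the flattened user dictionaries, and the function name are all assumptions for illustration, not behavior confirmed by the solution.

```python
from datetime import date, timedelta


def license_utilization(users, today, active_window_days=30):
    """Compare assigned seats with users active inside the window."""
    cutoff = today - timedelta(days=active_window_days)
    active = [
        u for u in users
        if u["lastDuoActivityOn"]  # None means the seat was never used
        and date.fromisoformat(u["lastDuoActivityOn"]) >= cutoff
    ]
    licensed = len(users)
    return {
        "licensed": licensed,
        "active": len(active),
        "utilization": len(active) / licensed if licensed else 0.0,
    }


# Hypothetical users with assigned seats.
users = [
    {"username": "alice", "lastDuoActivityOn": "2025-09-01"},
    {"username": "bob", "lastDuoActivityOn": "2025-08-20"},
    {"username": "carol", "lastDuoActivityOn": "2025-05-01"},
    {"username": "dave", "lastDuoActivityOn": None},
]
print(license_utilization(users, today=date(2025, 9, 9)))
```

Passing `today` explicitly keeps the calculation reproducible when the same export is reprocessed later.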

AI metrics API

    query: |
      {
        aiMetrics(from: "2024-01-01", to: "2024-06-30") {
          codeSuggestions {
            shownCount
            acceptedCount
          }
          duoChatContributorsCount
          duoAssignedUsersCount
        }
      }
    # Purpose: Gets pre-calculated metrics for trend analysis
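These pre-calculated counts can be turned into the rates shown on the dashboards. The sketch below derives a code suggestion acceptance rate and a Chat adoption rate from a response shaped like the query above. Treating adoption as Chat contributors divided by assigned users is an assumption, and the response values are made up.

```python
def safe_ratio(numerator, denominator):
    """Divide, returning 0.0 when there is nothing to divide by."""
    return numerator / denominator if denominator else 0.0


def summarize_ai_metrics(response):
    """Derive headline rates from an aiMetrics-style response."""
    metrics = response["data"]["aiMetrics"]
    suggestions = metrics["codeSuggestions"]
    return {
        "acceptance_rate": safe_ratio(
            suggestions["acceptedCount"], suggestions["shownCount"]
        ),
        # Assumed definition: Chat contributors over users with a Duo seat.
        "chat_adoption_rate": safe_ratio(
            metrics["duoChatContributorsCount"], metrics["duoAssignedUsersCount"]
        ),
    }


# Hypothetical response with the same fields as the query above.
response = {
    "data": {
        "aiMetrics": {
            "codeSuggestions": {"shownCount": 1200, "acceptedCount": 300},
            "duoChatContributorsCount": 45,
            "duoAssignedUsersCount": 60,
        }
    }
}
print(summarize_ai_metrics(response))
```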

Service Ping API (REST)

url: "{GITLAB_URL}/api/v4/usage_data/service_ping"

# Purpose: Collects instance-wide usage statistics
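For readers who want to call these APIs outside the pipeline, the sketch below builds the two kinds of request using only the Python standard library. It constructs the requests without sending them. The helper names, the example host, and the token value are hypothetical; the GraphQL path `/api/graphql` and the `PRIVATE-TOKEN` header are standard GitLab conventions rather than details taken from this solution.

```python
import json
import urllib.request


def build_graphql_request(gitlab_url, token, query):
    """Build (but do not send) a POST request for the GraphQL endpoint."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        f"{gitlab_url.rstrip('/')}/api/graphql",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def build_service_ping_request(gitlab_url, token):
    """Build (but do not send) a GET request for the Service Ping endpoint."""
    return urllib.request.Request(
        f"{gitlab_url.rstrip('/')}/api/v4/usage_data/service_ping",
        headers={"PRIVATE-TOKEN": token},
        method="GET",
    )


# Hypothetical host and token; read real values from CI/CD variables.
graphql_request = build_graphql_request(
    "https://gitlab.example.com/", "example-token", "{ currentUser { username } }"
)
print(graphql_request.full_url)
```

Passing either request to `urllib.request.urlopen` would send it; keeping construction separate makes the URL and headers easy to inspect first.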

Putting it all together

To demonstrate the power of this integrated analytics solution, let's walk through a complete end-to-end implementation journey — from initial deployment to fully automated ROI measurement.

Start by deploying the containerized solution in your environment using the provided Docker configuration. Within minutes, you'll have both the analytics API and React dashboard running locally.

The hybrid data architecture approach immediately begins collecting metrics from your existing monthly CSV exports while establishing real-time GraphQL connections to your GitLab instance.

Automation through Python scripting

The real power emerges when you leverage Python scripting to automate the entire data collection and processing workflow. The solution includes comprehensive Python scripts that can be easily customized and scheduled.

GitLab CI/CD integration

For enterprise-scale automation, integrate these Python scripts into scheduled GitLab CI/CD pipelines. This approach leverages your existing GitLab infrastructure while ensuring consistent, reliable data collection:

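    # .gitlab-ci.yml example
    duo_analytics_collection:
      stage: analytics
      script:
        - python scripts/enhanced_duo_data_collection.py
        - python scripts/metric_aggregations.py
        - ./deploy_dashboard_updates.sh
      rules:
        # Run only in scheduled pipelines. The cron expression itself
        # (for example "0 2 1 * *", monthly on the 1st at 2 AM) is set on the
        # pipeline schedule under CI/CD → Schedules, not in this file.
        - if: $CI_PIPELINE_SOURCE == "schedule"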

This automation strategy transforms manual data collection into a self-sustaining analytics engine. Your Python scripts execute monthly via GitLab pipelines, automatically collecting usage data, calculating ROI metrics, and updating dashboards — all without manual intervention.

Once automated, the solution operates seamlessly: Scheduled pipelines execute Python data collection scripts, process GraphQL responses into business metrics, and update dashboard data stores. You can watch as the dashboard populates with real usage patterns: code suggestion volumes by programming language, user adoption trends across teams, and license utilization rates that reveal optimization opportunities.

The real value emerges when you access the ROI Overview dashboard. Here, you'll see concrete engagement metrics that can be converted into business impact for your organization. You might discover, for example, that your active Duo users are generating 127% monthly ROI through time savings and productivity gains, while 23% of your licenses remain underutilized. These insights translate directly into actionable recommendations: expand licenses to high-performing teams, implement targeted training for underutilized users, and build data-driven business cases for broader AI adoption.
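How an ROI percentage like this is reached depends on how time savings are valued. One common framing is ROI = (value of time saved - license cost) / license cost. The sketch below applies that formula. Every input is a hypothetical placeholder, not a GitLab price or a measured result, and the formula is one reasonable choice rather than the calculation the dashboard necessarily uses.

```python
def monthly_roi(active_users, hours_saved_per_user, hourly_cost,
                licensed_seats, seat_cost):
    """Return monthly ROI as a fraction (1.0 means 100%)."""
    value = active_users * hours_saved_per_user * hourly_cost
    cost = licensed_seats * seat_cost
    if cost <= 0:
        raise ValueError("license cost must be positive")
    return (value - cost) / cost


# Hypothetical inputs: 80 active users saving 2 hours each per month at
# 50 per hour, against 100 seats costing 40 each.
roi = monthly_roi(
    active_users=80,
    hours_saved_per_user=2,
    hourly_cost=50,
    licensed_seats=100,
    seat_cost=40,
)
print(f"{roi:.0%}")
```

Note that the cost side counts every licensed seat while the value side counts only active users, so underutilized licenses pull the result down directly.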

Why GitLab?

GitLab's comprehensive DevSecOps platform provides the ideal baseline for enterprise AI analytics and measurement. With native GraphQL APIs, flexible data access, and integrated AI capabilities through GitLab Duo, organizations can centralize AI measurement across the entire development lifecycle without disrupting existing workflows.

The solution's open architecture enables custom analytics solutions like the one developed through our Duo Accelerator program. GitLab's commitment to API-first design means you can extract detailed usage data, integrate with existing enterprise systems, and build sophisticated ROI calculations that align with your organization's specific metrics and reporting requirements.

Beyond technical capabilities, our approach ensures you're not just implementing tools; you're building sustainable AI adoption strategies. The purpose-built solution that emerged from the Duo Accelerator program exemplifies this approach, providing hands-on guidance, proven frameworks, and custom solutions that address real enterprise challenges like ROI measurement and license optimization.

As GitLab continues enhancing native analytics capabilities, this foundation becomes even more valuable. The measurement frameworks, KPIs, and data collection processes established through custom analytics solutions seamlessly transition to enhanced native features, ensuring your investment in AI measurement grows with GitLab's evolving solution.

Try GitLab Duo today

AI ROI measurement is just the beginning. With GitLab Duo's capabilities, you can build out comprehensive analytics, so you're not just tracking AI usage; you're building a foundation for data-driven AI optimization that scales with your organization's growth and evolves with GitLab's expanding AI capabilities.

The analytics solution developed through GitLab's Duo Accelerator program demonstrates how customer success partnerships can deliver immediate value while establishing long-term strategic advantages. From initial deployment to enterprise-scale ROI measurement, this solution provides the visibility and insights needed to maximize AI investments and drive sustainable adoption.

The combination of Python automation, GitLab CI/CD integration, and purpose-built analytics creates a competitive advantage that extends far beyond individual developer productivity. It enables strategic decision-making, optimizes resource allocation, and builds compelling business cases for continued AI investment and expansion.

The future of AI-powered development is data-driven, and it starts with measurement. Whether you're beginning your AI journey or optimizing existing investments, GitLab provides both the platform and the partnership needed to succeed.

Get started with GitLab Duo today with a free trial of GitLab Ultimate with Duo Enterprise.