Published on: May 30, 2024

7 min read

Developing GitLab Duo: Secure and thoroughly test AI-generated code

Learn step-by-step how to enhance AI-generated code reliability and security using GitLab Duo and GitLab Pages (includes code samples and prompts).

Generative AI marks a monumental shift in the software development industry, making it easier to develop, secure, and operate software. Our new blog series, written by our product and engineering teams, gives you an inside look at how we create, test, and deploy the AI features you need integrated throughout the enterprise. Get to know new capabilities within GitLab Duo and how they will help DevSecOps teams deliver better results for customers.

As AI becomes increasingly integral to software development, ensuring the security and thorough testing of AI-generated code is paramount. This article is a step-by-step guide to combining GitLab Duo, our suite of AI features powering the DevSecOps workflow, and GitLab Pages to secure and thoroughly test AI-generated code. You'll discover how to mitigate common risks, including how to automatically generate tests, test code, and deploy test reports, all to enhance the reliability of your AI-generated code.


Challenges in AI-generated code

AI-generated code often faces issues such as:

- Mismatched algorithms: Incorrect or suboptimal algorithms can be generated.

- Dependency problems: AI may include dependencies that are outdated or incompatible.

- Security vulnerabilities: AI might generate code with potential security flaws.

A recent study published by the Association for Computing Machinery on ChatGPT’s responses to programming questions found that 52% of the answers contained incorrect information, and 77% were overly verbose. Despite these shortcomings, users preferred ChatGPT’s comprehensive and well-articulated answers 35% of the time, and overlooked the misinformation 39% of the time. Addressing these challenges requires tooling that tests and scans AI-generated code before it ships.

GitLab’s approach to AI security and testing

GitLab has a comprehensive content strategy focused on integrating security measures within the development workflow. By leveraging GitLab Duo for AI-powered code generation and GitLab Pages for embedding test reports, developers can ensure their AI-generated code is both secure and reliable.

The following step-by-step guide pairs GitLab Duo and GitLab Pages to secure and thoroughly test AI-generated code, using a Flask web server as the example.

1. Create a new project on GitLab.com

- Go to GitLab.com.

- Click on the "New project" button.

- Choose "Create blank project".

- Enter a project name (e.g., AI_Code_Security).

- Set the visibility level (Public, Internal, or Private).

- Click "Create project".

2. Enable GitLab Duo Code Suggestions

- Navigate to your project.

- Click on the "Web IDE" button to open the Web IDE.

- Ensure that GitLab Duo features like Code Suggestions and Duo Chat are enabled.

- Start coding in the Web IDE. As you type, GitLab Duo will provide code suggestions to help you write code more efficiently.

3. Create a Flask web server

You can create a Flask web server using the comment (highlighted in green) in the screenshot below.

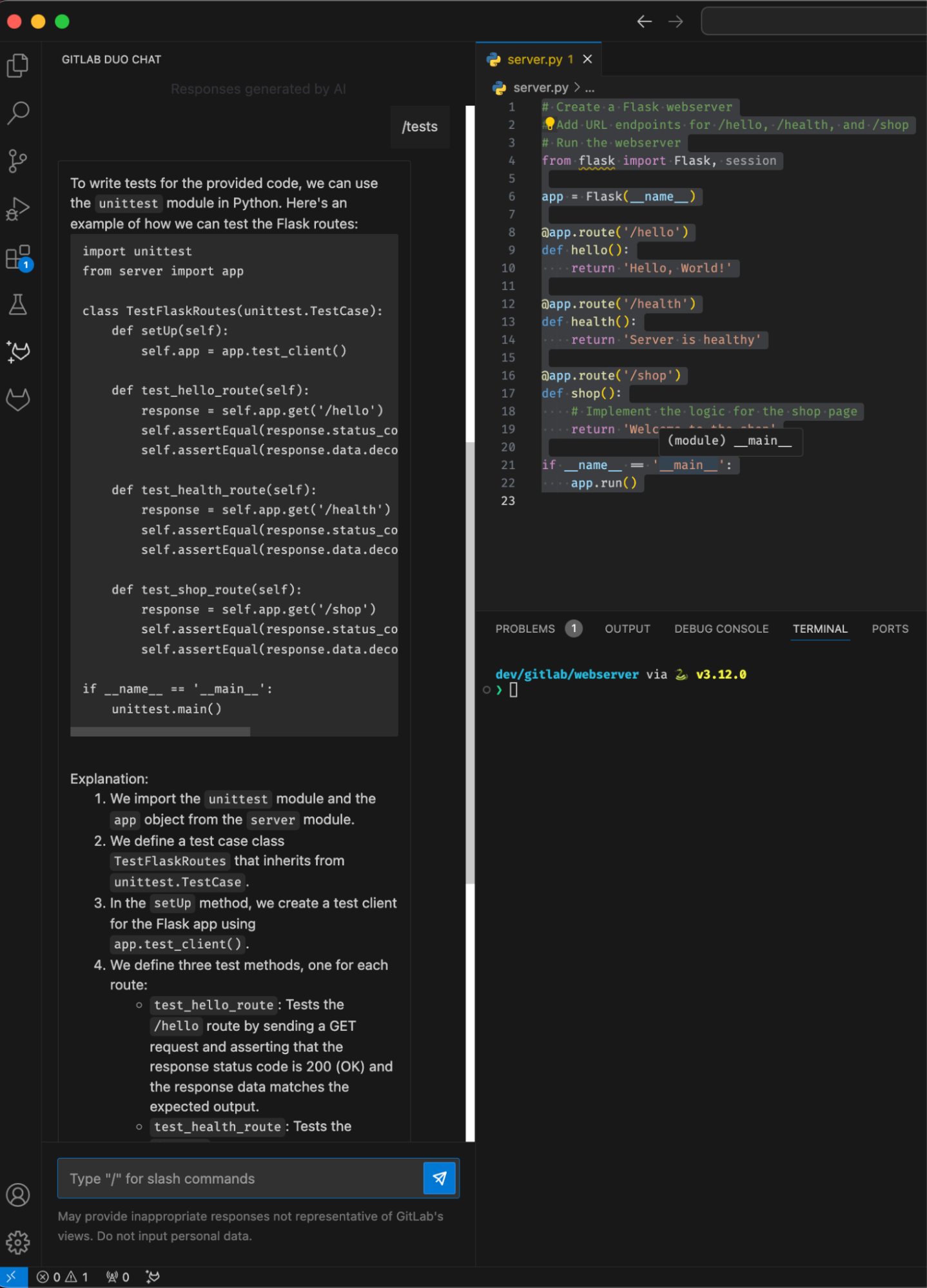

4. Generate tests with GitLab Duo

Unit tests are essential for validating the functionality of the generated code. Use GitLab Duo’s /tests command to generate testing suggestions directly in the Web IDE. This command can be tailored with additional instructions to focus on specific aspects such as performance, regression, or using particular frameworks.

Example usage in Web IDE:

- Select the code for which you want to generate tests.

- Use the command /tests, followed by additional instructions if needed.

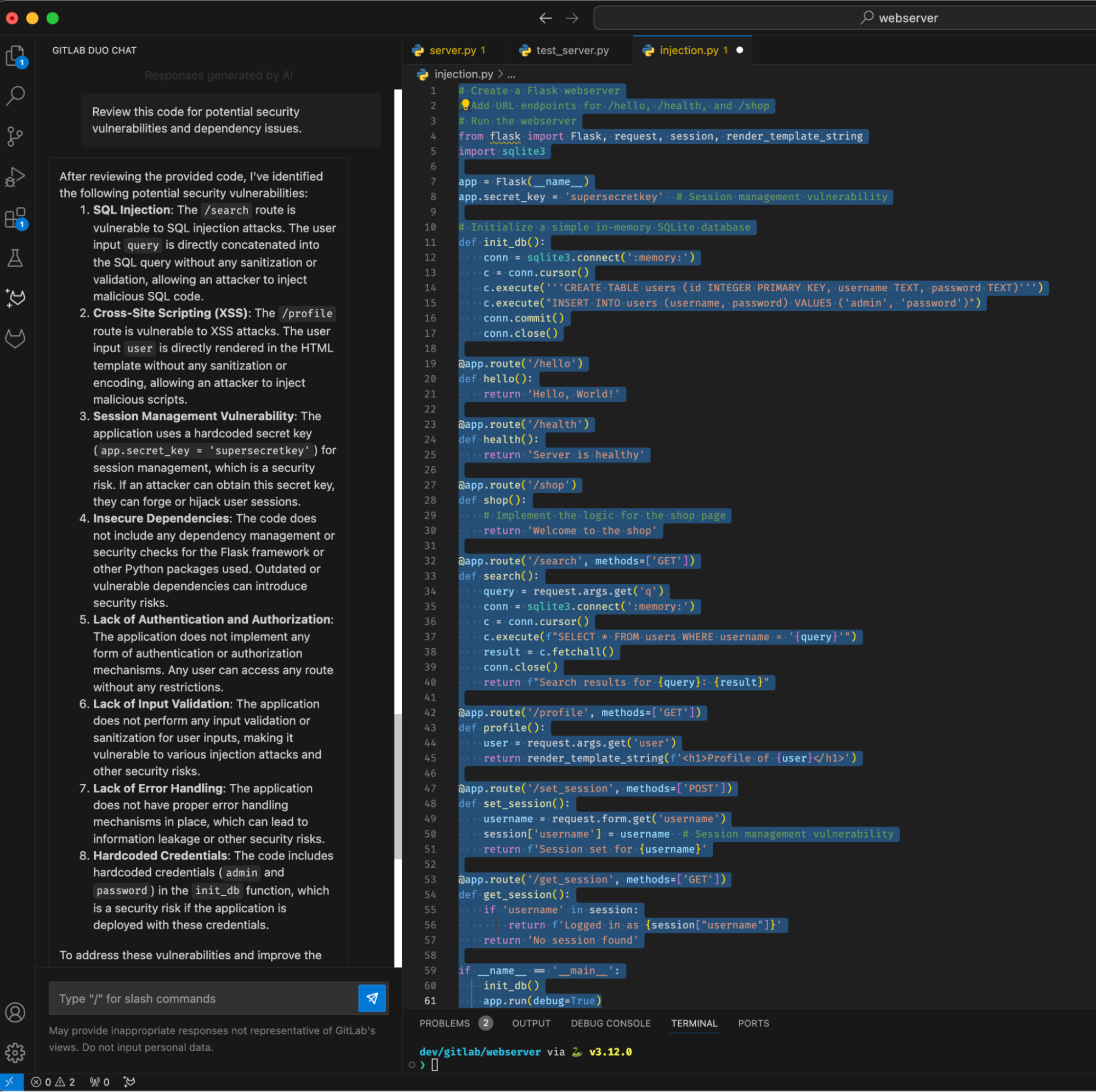

5. Identify issues with AI-generated code using GitLab Duo Chat

Use GitLab Duo Chat to review and refine AI-generated code. For instance, let's check our Flask web server code for security vulnerabilities:

Prompt: Review this code for potential security vulnerabilities and dependency issues.

GitLab Duo Chat can help identify the vulnerabilities in the above code.

6. Generate test reports

After running your tests, generate a test report that will be deployed using GitLab Pages.

Prompt: Write me a python script to generate a test report that will be deployed using GitLab Pages.

What's happening here?

- The script ensures the test_reports directory exists.

- It runs the test_server.py file using subprocess.run(), capturing the output.

- The raw output is saved to test_reports/test_output.txt.

- An HTML report is generated, embedding the test output within <pre> tags for readability, and saved as test_reports/index.html.
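The script that Duo Chat returns will vary from run to run and is not reproduced in this text. As a rough illustration only, a script matching the description above might look like the following sketch. The function name generate_report and its parameters are assumptions made for this example, and merging stdout with stderr is a choice made here because Python's unittest runner writes its results to stderr.

```python
import html
import subprocess
import sys
from pathlib import Path


def generate_report(test_file="test_server.py", report_dir="test_reports"):
    """Run a test file and write its raw output plus an HTML page to report_dir."""
    out_dir = Path(report_dir)
    # Ensure the report directory exists.
    out_dir.mkdir(parents=True, exist_ok=True)

    # Run the tests with the current interpreter and capture both streams.
    result = subprocess.run(
        [sys.executable, test_file],
        capture_output=True,
        text=True,
    )
    output = result.stdout + result.stderr

    # Save the raw output.
    (out_dir / "test_output.txt").write_text(output, encoding="utf-8")

    # Embed the escaped output in <pre> tags for readability.
    status = "passed" if result.returncode == 0 else "failed"
    page = (
        "<!DOCTYPE html>\n"
        "<html>\n"
        '<head><meta charset="utf-8"><title>Test Report</title></head>\n'
        "<body>\n"
        f"<h1>Test Report ({status})</h1>\n"
        f"<pre>{html.escape(output)}</pre>\n"
        "</body>\n"
        "</html>\n"
    )
    (out_dir / "index.html").write_text(page, encoding="utf-8")
    return result.returncode
```

Saved as generate_test_report.py, the file would also need to call generate_report() at the bottom, for example under an if __name__ == "__main__": guard, so that running python generate_test_report.py produces the report. Escaping the output with html.escape keeps stray angle brackets in test output from breaking the page.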

7. Deploy the test report with GitLab Pages

Use GitLab Pages to host and display the test report. Here’s the configuration for our .gitlab-ci.yml file to deploy the test report.

    stages:
      - test
      - deploy

    test_job:
      stage: test
      script:
        - python generate_test_report.py
      artifacts:
        paths:
          - test_reports/

    pages:
      stage: deploy
      script:
        - mv test_reports public
      artifacts:
        paths:
          - public

With this setup, the test_job job runs the Python script to generate the test report. The pages job then moves the test_reports directory to public, the directory GitLab Pages serves content from.

8. Embed test reports in MR widgets

Embedding the test reports in merge request (MR) widgets provides immediate visibility into the testing outcomes. To do this, include the test report as an artifact in your CI/CD pipeline configuration:

    stages:
      - build
      - test
      - deploy

    build_job:
      stage: build
      script:
        - echo "Building the project..."
        # Your build commands here

    test_job:
      stage: test
      script:
        - mkdir -p test-reports
        - python test_server.py > test-reports/results.xml
      artifacts:
        when: always
        reports:
          junit: test-reports/results.xml
        paths:
          - test-reports/results.xml

    pages:
      stage: deploy
      script:
        # GitLab Pages publishes the public directory by default
        - mkdir public
        - mv test-reports public/
      artifacts:
        paths:
          - public

Because the report is listed under artifacts:reports:junit, GitLab parses it and displays the test results in the merge request widget, so reviewers see the testing outcomes without opening the job logs. Note that this report type expects the file to contain JUnit-style XML.

Case study: AI reliability with security policies and scanners

Imagine a scenario where an AI-generated code snippet introduces a dependency that has known vulnerabilities. With GitLab Duo and GitLab security policies in place, this dependency would be flagged during the code generation process. Consider the following AI-generated snippet:

    import os

    from flask import Flask, request

    app = Flask(__name__)


    @app.route('/search')
    def search():
        query = request.args.get('query')
        execute_os_command(query)
        return 'You searched for: ' + query


    def execute_os_command(command):
        os.system(command)


    if __name__ == '__main__':
        app.run()

In this example, the search endpoint is vulnerable to OS command injection. By leveraging GitLab's Static Application Security Testing (SAST) component, this vulnerability would be detected during the CI/CD pipeline.
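Once a scanner flags the injection, a typical remediation is to validate the input against an allow-list and to pass it to a fixed command as a single argument, without a shell. The sketch below illustrates that pattern using only the standard library. The function name run_search, the SAFE_QUERY pattern, and the echoing child process that stands in for a real search tool are all assumptions made for this example, not part of the original server.

```python
import re
import subprocess
import sys

# Hypothetical allow-list: letters, digits, spaces, hyphens, and underscores.
SAFE_QUERY = re.compile(r"[A-Za-z0-9 _-]{1,64}")


def run_search(query):
    """Validate the query, then pass it to a fixed command as one argument."""
    if query is None or not SAFE_QUERY.fullmatch(query):
        raise ValueError("invalid search query")

    # The command is a fixed argument list and no shell is involved, so the
    # query cannot introduce extra commands. The child process here only
    # echoes its argument and stands in for a real search tool.
    completed = subprocess.run(
        [sys.executable, "-c", "import sys; print(sys.argv[1])", query],
        capture_output=True,
        text=True,
        check=True,
    )
    return completed.stdout.strip()
```

In the Flask handler, the result of a function like this would replace the call to execute_os_command, with a ValueError mapped to an HTTP 400 response.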

Integrate SAST scanning to detect vulnerabilities

GitLab SAST automatically analyzes your code for security vulnerabilities. Here’s how it can be integrated into your .gitlab-ci.yml file to scan for issues:

    stages:
      - build
      - test
      - sast
      - deploy

    build_job:
      stage: build
      script:
        - echo "Building the project..."
        # Your build commands here

    test_job:
      stage: test
      script:
        - mkdir -p test-reports
        - python test_server.py > test-reports/results.xml
      artifacts:
        when: always
        reports:
          junit: test-reports/results.xml
        paths:
          - test-reports/results.xml

    sast_job:
      stage: sast
      script:
        # Placeholder: echo does not scan anything. Replace it with an analyzer
        # that writes gl-sast-report.json, or include GitLab's SAST CI/CD template.
        - echo "Running SAST..."
      artifacts:
        reports:
          sast: gl-sast-report.json
      only:
        - branches

    pages:
      stage: deploy
      script:
        - mv test-reports public
      artifacts:
        paths:
          - public

In this configuration, the sast_job job in the sast stage runs SAST to detect vulnerabilities in the code, producing a report (gl-sast-report.json) that is included in the pipeline artifacts. By integrating security policies and robust testing frameworks, GitLab Duo helps customers ensure that their AI-generated code is both efficient and secure.
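For teams that want to inspect the report outside the GitLab UI, a small script can summarize it. The sketch below assumes the report is a JSON object with a top-level vulnerabilities list whose entries carry a severity string, which is how GitLab security reports are generally structured; check the schema for your GitLab version before relying on it. The function names and the choice of blocking severities are hypothetical.

```python
import json
from collections import Counter


def load_report(path="gl-sast-report.json"):
    """Read a SAST report file into a dictionary."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def summarize_sast_report(report):
    """Count findings per severity in a parsed SAST report dictionary."""
    findings = report.get("vulnerabilities", [])
    # Entries without a severity field are counted as "Unknown".
    return Counter(finding.get("severity", "Unknown") for finding in findings)


def has_blocking_findings(report, blocking=("Critical", "High")):
    """Return True if any finding has one of the blocking severities."""
    counts = summarize_sast_report(report)
    return any(counts[level] > 0 for level in blocking)
```

A later pipeline job could call has_blocking_findings(load_report()) and exit with a non-zero status to stop a deployment, although GitLab's own merge request approval policies are the more usual way to enforce this.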

Get started today

The integration of AI in software development brings significant benefits but also introduces new challenges. By using tools like GitLab Duo and GitLab Pages, developers can ensure that their AI-generated code is secure, well-tested, and reliable. Explore these tools today and join the conversation on enhancing AI security and testing!

Start a trial of GitLab Ultimate today to access GitLab Duo and GitLab Pages.


We want to hear from you

Enjoyed reading this blog post or have questions or feedback? Share your thoughts by creating a new topic in the GitLab community forum.
