Published on: August 26, 2024

How to choose the right security scanning approach

GitLab offers multiple scanning methods for CI/CD pipelines, including compliance frameworks and scan and pipeline execution policies. Learn the basics, configurations, and advantages/disadvantages.

Integrating security scans into your CI/CD pipeline is crucial for maintaining robust and secure applications. But who's responsible for those scans? Who is responsible for adding them into every CI/CD pipeline for all projects? And who decides which identified vulnerability may pass or needs fixing? For organizations in regulated industries, these are critical questions.

In this article, you'll learn how GitLab CI/CD enables each person in the software development lifecycle to incorporate security scanning. You'll also discover the advantages and disadvantages of the various options available to add scanning to GitLab project pipelines. Code examples will help you kickstart security scanning on the GitLab DevSecOps platform.

Article contents:

- The basics of setting up security scanning

- Pipeline includes

- Compliance frameworks

- Policies

- Get started with security scanning

The basics of setting up security scanning

GitLab uses fictional personas to describe the individual team member who would typically use a given security feature or approach. By exploring the perspective of a Software Developer (Sasha), Application Security Engineer (Amy), or Platform Engineer (Priyanka), you can better understand the needs of each role on your team.

GitLab follows a "pipeline-per-project" principle: each project's pipeline is defined in a file named .gitlab-ci.yml. This file contains the project's CI/CD pipeline definition and is revision controlled like any other file in the project. You'll learn about these project pipelines, as well as compliance pipelines and policy pipelines. While compliance pipelines and policy pipelines are also YAML files in GitLab projects, they typically have a different file name and serve a different purpose.

If you are already familiar with security scanning in GitLab, this article should clarify which security pipeline options fit your team or organization. To that end, we will discuss each approach with respect to the following criteria:

- Ease of use: How easy is it to add security scanning to project pipelines? Is it a reasonable task for Sasha, or something that Amy and Priyanka should handle?

- Customization: How deeply can scanner configurations be customized using that approach? While sensible default configurations that cover a wide range of customer needs are worth their weight in gold, the time often comes when scanner configurations need adjustments.

- Enforcement: Is this approach suitable for companies that operate in regulated industries or otherwise have global policies in place? Can we ensure each relevant project runs Scanner X with Configuration Y?

Pipeline includes

GitLab project pipeline includes are a mechanism for integrating external pipelines into the .gitlab-ci.yml project pipeline. This is similar to including a library in many programming languages. This powerful feature lets you use your own templates, as well as GitLab-provided templates, as building blocks for your pipelines. Includes can be used in project pipelines or other pipeline files. A common example is including a security scanning pipeline in a GitLab project pipeline.

Here are the common types of includes, which use the security scanner example.

Templates

GitLab offers ready-to-use templates that can be included in a project pipeline to make it easier for teams to add in various pre-built elements. The following is example code:

include:
  - template: Jobs/Secret-Detection.gitlab-ci.yml
  - template: Jobs/SAST.gitlab-ci.yml
  - template: Jobs/Dependency-Scanning.gitlab-ci.yml
  - template: Jobs/Container-Scanning.gitlab-ci.yml

This code includes GitLab's templates for Secret Detection, Static Application Security Testing, Dependency Scanning, and Container Scanning – all in only five lines of code.

To modify the behavior of jobs included via templates, you can either use variables or use GitLab's property merging capabilities.

The following example modifies the GitLab Container Scanning template using variables. The template needs to know the location of the image and reads it from a variable named CS_IMAGE, as documented in the template code.

variables:
  CS_IMAGE: "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"

include:
  - template: Jobs/Container-Scanning.gitlab-ci.yml

Defining CS_IMAGE in the project pipeline makes it available to the included job template: the Container Scanning job inherits the variable's value.

If we wanted to change the allow_failure property defined in the template, we would need to resort to property merging, since the job template provides no variable for that value. (The allow_failure property is generally available on every GitLab pipeline job. Please check the documentation for details.)

In this example, allow_failure is set to false, meaning the entire pipeline stops on a container scanning failure. This stops any unscanned containers from moving forward in the pipeline.

include:
  # Includes a job called "container_scanning"
  - template: Jobs/Container-Scanning.gitlab-ci.yml

# Define a job with same name for merging
container_scanning:
  allow_failure: false

GitLab will load the job template and – as defined in the template code – register a job called container_scanning. As the pipeline definition declares another job with that name, GitLab will merge that specification with the already registered job.

While this feature offers many possibilities, it also makes it impossible to protect certain properties from being overwritten. We are only at the point of modifying the project pipeline, so there's no control over that anyway. But later on, you will see that this can pose a challenge when security needs to be enforced on a project.

Components

Templates are a great start for sharing repeatable GitLab pipelines. To further abstract reusable code across an entire organization or a GitLab instance, GitLab introduced components. Components are the next logical step in GitLab's evolution of pipelines. Components are designed to simplify the creation and use of functional building blocks to use in pipelines, or even to package and ship entire pipelines if needed. They offer a well-defined interface, which accepts "inputs" for configuration. Otherwise, the component is completely isolated, which makes them a great candidate to share work within an organization and to be searchable and reusable building blocks.

Developers can use the CI/CD Catalog to browse and search the collection of publicly available GitLab components, which are components officially built and maintained by GitLab. GitLab uses the CI/CD Catalog to publish our shipped components such as security scanners alongside community-provided components.

Components are consumed similarly to templates via the include keyword. In an example above, we showed how the container scanning job requires knowledge of the image location. The component for container scanning takes this information through an input called cs_image. The configuration equivalent to the previous example looks like this:

include:
  - component: $CI_SERVER_FQDN/components/sast/sast@2.0.2
  - component: $CI_SERVER_FQDN/components/dependency-scanning/main@0.2.0
  - component: $CI_SERVER_FQDN/components/secret-detection/secret-detection@1.1.2
  - component: $CI_SERVER_FQDN/components/container-scanning/container-scanning@4.1.0
    inputs:
      cs_image: "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"

In this example, the SAST component is pinned at Version 2.0.2, the Dependency Scanning component at Version 0.2.0, the Secret Detection component at Version 1.1.2, and the Container Scanning component at Version 4.1.0. ~latest and more tags are available for bleeding-edge component usage and other development needs.
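As a hedged illustration of the version qualifiers just mentioned, the sketch below contrasts a pinned release with the ~latest tag. It assumes the SAST component is published as components/sast/sast on your instance; the two entries are alternatives and should not be included together.

```yaml
include:
  # Pinned: reproducible, and it only changes when you bump the version yourself.
  - component: $CI_SERVER_FQDN/components/sast/sast@2.0.2

# Alternative (use instead of the pinned entry above, not alongside it):
# ~latest follows the newest published release, so scanner behavior
# can change between pipeline runs without any change to this file.
#  - component: $CI_SERVER_FQDN/components/sast/sast@~latest
```

Pinned versions are usually the safer default for anything that has to be auditable, while ~latest suits experiments and development setups.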

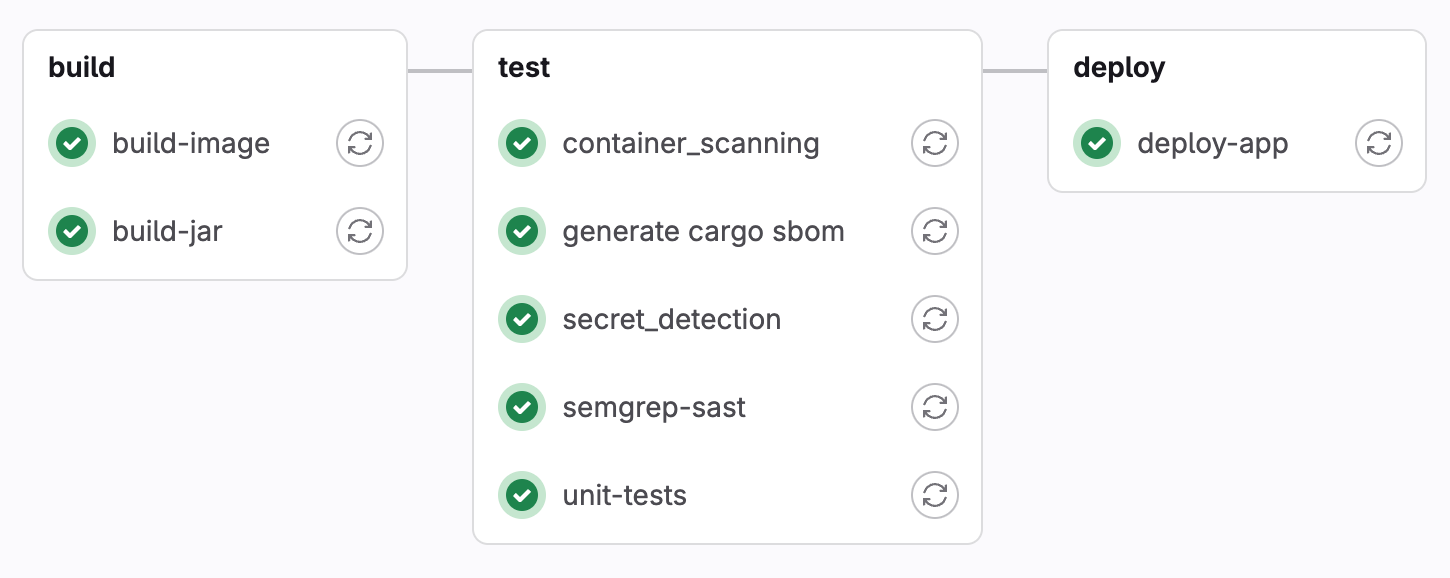

Whether you use templates or components, your pipeline might look like the image below. The top four jobs in the test stage are the result of the four include statements in the code above.

Advantages and disadvantages of using pipeline includes

Ease of use

One of the benefits of using pipeline includes in GitLab is their ease of use. We have seen how, with essentially six lines of code, we included four commonly used security scanners. All the complex logic and setup are handled within the templates or components, saving Sasha time and effort by providing a ready-to-use solution.

Customization

While templates offer the highest flexibility (variables and merging), it's important to remember that "with great power comes great responsibility." The flexibility of templates supports extensive customization, but requires careful management and oversight to avoid unexpected results.

In contrast, components provide a more structured mechanism for authoring, sharing, and maintaining building blocks for a broader audience. Components, while not as customizable, enhance stability and reliability, and are a valuable, reusable, and repeatable feature.
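To make the "structured" customization of components concrete, here is a minimal sketch that sets one input. It assumes the SAST component exposes a stage input with a default of test, as the component spec excerpt later in this article suggests; the security stage name is hypothetical.

```yaml
stages:
  - build
  - test
  - security   # hypothetical extra stage reserved for scanner jobs

include:
  - component: $CI_SERVER_FQDN/components/sast/sast@2.0.2
    inputs:
      stage: security   # overrides the component's default of "test"
```

Only values that the component author chose to expose as inputs can be changed this way, which is exactly the trade-off between flexibility and stability described above.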

Enforcement

As the name include suggests, it is the GitLab project pipeline that needs to include templates or components. While scanner templates are straightforward to use, Amy and Priyanka cannot be sure Sasha has included them properly, or even at all. Enforcement of scanner usage is needed.

For regulated industries, managing security in project pipelines is not an approach that provides the necessary audit trail or enforcement.

Compliance frameworks

GitLab identified the gap between the ability to enforce security scans on project pipelines and the need to adhere to regulatory compliance frameworks such as PCI DSS, NIST, and many more. The introduction of compliance frameworks as functionality caters to precisely this challenge.

At first glance, a compliance framework in GitLab is merely a label attached to a project, which would typically be named after the regulatory framework it is supposed to implement. The magic is added with the link between that label and a compliance pipeline YAML file, which is responsible for implementing the necessary steps to ensure compliance.

The mechanism is straightforward: Every time the project pipeline is triggered, GitLab executes the compliance pipeline instead. The compliance pipeline runs with both the CI/CD variables and predefined CI/CD variables of the project pipeline.

This allows for two main design patterns: a "wrapping pipeline," where the compliance pipeline includes the project pipeline, and an "overriding pipeline," where it does not.

Note: Compliance pipelines have been deprecated in GitLab Version 17.3 and are scheduled for removal in Version 19.0. At this point, we cannot recommend implementing this approach for new development platforms. However, you might already be using them, making it worth reading this section.

Wrapping pipelines

In the wrapping approach, the compliance pipeline defines its own jobs according to specific compliance needs. It includes the project pipeline in the same way we have seen templates included in the previous section. This setup is possible because the predefined CI/CD variables originate from the project pipeline, allowing the system to identify the pipeline definition's location for inclusion.

Here is an example of what a simple compliance pipeline might look like.

include:
  - component: $CI_SERVER_FQDN/components/sast/sast@2.0.2
  - component: $CI_SERVER_FQDN/components/dependency-scanning/main@0.2.0
  - component: $CI_SERVER_FQDN/components/secret-detection/secret-detection@1.1.2
  - component: $CI_SERVER_FQDN/components/container-scanning/container-scanning@4.1.0
  - project: '$CI_PROJECT_PATH'
    file: '$CI_CONFIG_PATH'
    ref: '$CI_COMMIT_SHA'

The last three lines include the project pipeline based on available variables.

Overriding pipelines

Unlike wrapping pipelines, which include the project pipeline, overriding pipelines ignore it entirely and run only their own jobs. This type of pipeline defines each step, encompassing all necessary jobs to build, test, and deploy the application.

Below we see a mock compliance pipeline that illustrates this approach.

stages: ["build", "test", "deploy"]

include:
  - component: $CI_SERVER_FQDN/components/sast/sast@2.0.2
  - component: $CI_SERVER_FQDN/components/dependency-scanning/main@0.2.0
  - component: $CI_SERVER_FQDN/components/secret-detection/secret-detection@1.1.2
  - component: $CI_SERVER_FQDN/components/container-scanning/container-scanning@4.1.0

build-job:
  stage: build
  script: echo "Building the container image"

test-job:
  stage: test
  script: echo "Running unit tests"

deploy-job:
  stage: deploy
  script: echo "Deploying app"

Advantages and disadvantages of compliance frameworks

Ease of use

While compliance frameworks aren't terribly complicated, they aren't as straightforward and simple as pipeline includes. They're meant to be written and assigned to projects by Amy and Priyanka, who now need to interact with pipeline YAML code. Three steps are involved: a framework needs to be declared in the top-level namespace, compliance pipelines need to be created and maintained, and the compliance frameworks need to be attached to the right projects.

Customization

Amy and Priyanka are the authors of compliance pipelines. Like Sasha in the previous section on includes, they have full control over what they include and how they include it, giving them maximum customizability of compliance jobs such as security scanners.

Enforcement

This aspect asks whether developers can tamper with enforced security jobs. In an environment with a strong separation of duties, this nuance requires some extra attention. To answer the question, we need to look at each pattern separately:

Wrapping pipelines

As seen before, project pipelines are included in compliance pipelines. In addition to group- or project-level CI/CD variables, every element of that project pipeline must be considered a potential threat to the compliance pipeline. Obviously, variables and jobs stick out as primary candidates. And, in fact, they can and will influence security job behavior if used maliciously.

Here is a simple example to illustrate the issue.

Compliance pipeline:

include:
  - template: Jobs/SAST.gitlab-ci.yml
  - template: Jobs/Secret-Detection.gitlab-ci.yml
  - project: '$CI_PROJECT_PATH'
    file: '$CI_CONFIG_PATH'
    ref: '$CI_COMMIT_SHA'

Project pipeline:

variables:
  SECRET_DETECTION_DISABLED: true

semgrep-sast:
  rules:
    - when: never

This project pipeline declares a variable SECRET_DETECTION_DISABLED (this could be done via project- or group-level CI/CD variables, too), which is evaluated in the included secret detection template. Further, the last three lines use the merging mechanism discussed previously to prevent the semgrep-sast job from executing at all. Kind of redundant, we know.

Both overrides could be prevented using components, but you get the idea. Components, too, are susceptible to such attacks via their inputs' default values, which often use variables as well. Let's take a look at how this could be taken advantage of.

Compliance pipeline:

include:
  - component: $CI_SERVER_FQDN/components/sast/sast@2.0.2
  - component: $CI_SERVER_FQDN/components/secret-detection/secret-detection@1.1.2
  - project: '$CI_PROJECT_PATH'
    file: '$CI_CONFIG_PATH'
    ref: '$CI_COMMIT_SHA'

Project pipeline:

variables:
  CI_TEMPLATE_REGISTRY_HOST: "docker.io"

To understand what is happening here, look at the SAST scanner component's Line 6:

spec:
  inputs:
    stage:
      default: test
    image_prefix:
      default: "$CI_TEMPLATE_REGISTRY_HOST/security-products"

The image_prefix input uses the CI_TEMPLATE_REGISTRY_HOST variable to build its default value. By setting this variable to an incorrect value, in the same way we set SECRET_DETECTION_DISABLED to true before, Sasha may cause the job to load the wrong image and break SAST testing.

To prevent this override ability by the developer role, avoid templates in favor of components. This approach covers many developer-induced loopholes. To be certain of compliance, hardcode values for component inputs.
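A minimal sketch of what hardcoding an input could look like in a wrapping compliance pipeline follows. It assumes the image_prefix input shown above and assumes that registry.gitlab.com/security-products is the correct image location for your instance; verify both against the component version you actually use.

```yaml
include:
  - component: $CI_SERVER_FQDN/components/sast/sast@2.0.2
    inputs:
      # Literal value instead of the default
      # "$CI_TEMPLATE_REGISTRY_HOST/security-products", so a project-level
      # CI_TEMPLATE_REGISTRY_HOST no longer influences the image location.
      image_prefix: "registry.gitlab.com/security-products"
  - project: '$CI_PROJECT_PATH'
    file: '$CI_CONFIG_PATH'
    ref: '$CI_COMMIT_SHA'
```

The same idea applies to every input whose default is built from a CI/CD variable: each one is worth reviewing and, where compliance depends on it, pinning to a literal.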

Overriding pipelines

This type is an entirely different beast. Developers get no chance of injecting actual pipeline code into the compliance pipeline. However, compliance pipelines do run with the project's CI/CD variables. Hence, any variable specified on the group- or project-level might modify the compliance pipeline's behavior. With SECRET_DETECTION_DISABLED set to true in the project CI/CD variables, the following compliance pipeline can be modified again:

stages: ["build", "test", "deploy"]

include:
  - template: Jobs/SAST.gitlab-ci.yml
  - template: Jobs/Secret-Detection.gitlab-ci.yml

build-job: ...

test-job: ...

deploy-job: ...

Components can solve this particular problem, but, as before, component inputs may use CI/CD variables developers can set. Compliance pipeline authors need to identify and take care of these situations.

Policies

Compliance pipelines' shortcomings have led to the next step for managing compliance: policies.

GitLab introduced policies as the way forward. Authors store a set of policies in a separate project as YAML files and apply them to projects on the group or project level. This gives Amy and Priyanka the flexibility to target individual projects with specific requirements but also to ensure compliance across the entire organization if needed. Access to the policy project can be controlled within the policy project and audited within GitLab.

Policies come in different types for different purposes. The types we are interested in right now are scan execution policies (SEP) and pipeline execution policies (PEP).

Scan execution policies

As the name suggests, SEPs require a particular scan – or set of scans – to be executed as part of the project pipeline and inject the respective scan jobs into the pipelines of associated projects. They include the respective template in the pipeline according to variables and rules set by Amy and Priyanka.

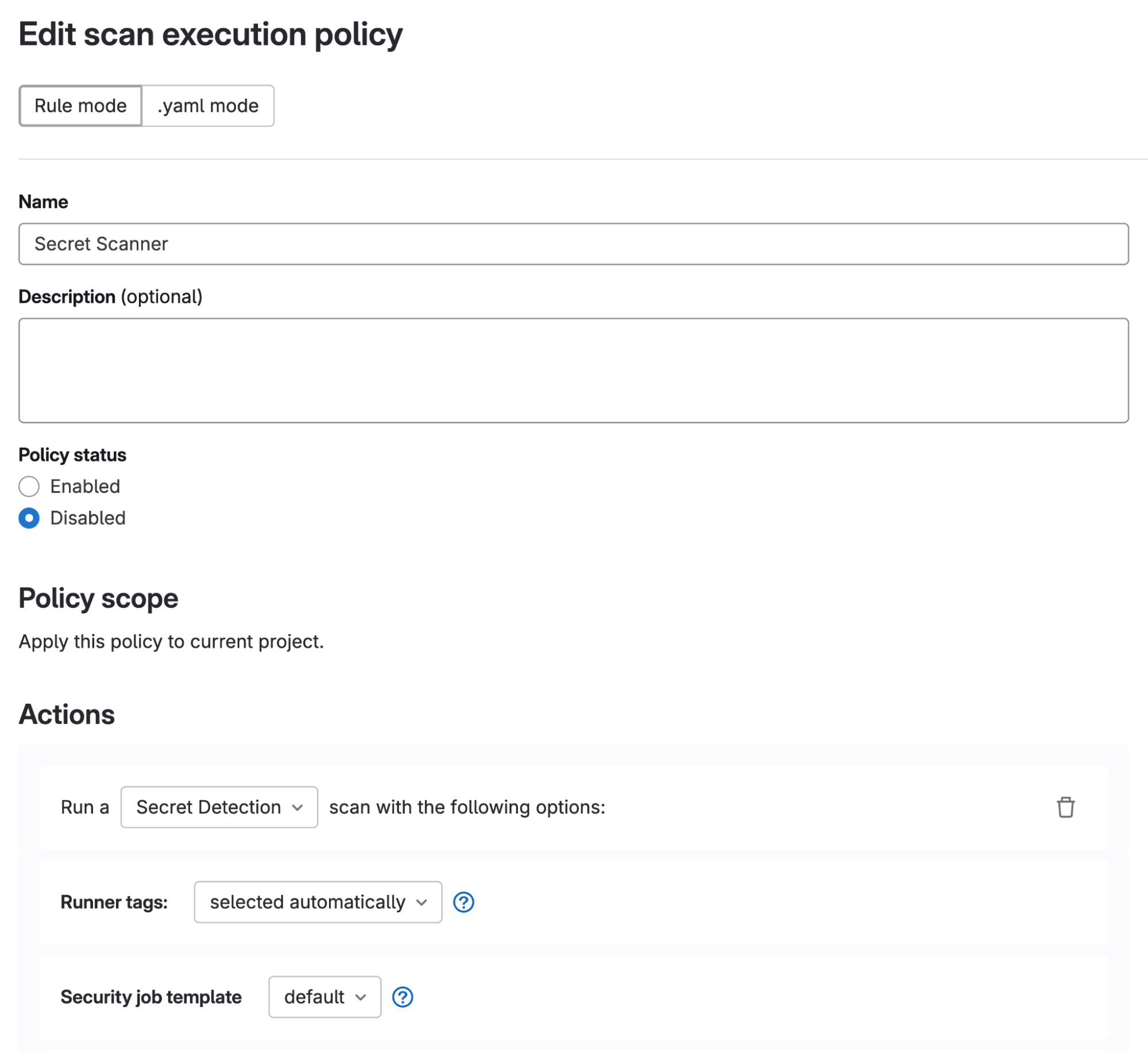

GitLab supports policy authors with a comprehensive user interface in addition to a YAML-based Git workflow. The following screenshot and code snippet illustrate a very basic example of a SEP:

name: Secret Scanner
description: ''
enabled: true
actions:
  - scan: secret_detection
rules:
  - type: pipeline
    branches:
      - "*"

For more details on SEP settings in the UI and YAML, please refer to the policy documentation.
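For orientation, here is a hedged sketch of how such a policy might sit inside the policy project's YAML file. It assumes the file lives at .gitlab/security-policies/policy.yml and that policies are listed under a scan_execution_policy key; the scheduled rule is an illustrative addition that is not part of the example above.

```yaml
# .gitlab/security-policies/policy.yml in the security policy project (assumed path)
scan_execution_policy:
  - name: Secret Scanner
    description: ''
    enabled: true
    actions:
      - scan: secret_detection
    rules:
      # Run the scan in every branch pipeline.
      - type: pipeline
        branches:
          - "*"
      # Assumed addition: also run the scan nightly on the main branch.
      - type: schedule
        cadence: '0 2 * * *'
        branches:
          - main
```

Because the file is ordinary YAML in a Git repository, changes to it can go through merge requests and reviews like any other code.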

Advantages and disadvantages of scan execution policies

Ease of use

SEPs provide a lightweight, easy-to-use mechanism that enforces security on existing and new CI/CD pipelines across the organization or on a granular level. The UI support makes them a viable tool for all relevant personas.

Customization

SEPs are restricted to predefined scanner jobs, and there is no option to extend this list with custom jobs at this point. This limitation can be restrictive for teams with unique scanning requirements that fall outside the standard options.

Enforcement

Once an SEP is applied to a project (directly or indirectly), Sasha has no way to get rid of that scan job. There may, however, be ways to manipulate the scan job's behavior, intentionally or not.

Jobs injected via SEPs are generally susceptible to CI/CD variables and adhere to the general rules of variable precedence. To limit this, policies incorporate logic that denies changing certain predefined variables, as documented, and generally denies the configuration of variables that follow certain patterns such as _DISABLED or _EXCLUDED_PATHS.

Despite these security measures, inconsiderate use of policies may still open opportunities for tampering: In my test, I was able to set a project-level CI/CD variable SECURE_ANALYZERS_PREFIX to a bad value (a non-existing location), and, as the template code shows, the secret detection template uses that variable to build the location of the scanner image.

While the scan job does get included in the pipeline run, it crashes very early and, therefore, provides no scan results. Due to the allow_failure: true configuration, the pipeline will continue to run and eventually execute a deploy job.

Because SEP variables take the highest variable precedence, there is an easy fix to reduce the attack surface of the policy: Simply hardcode the correct value in your policy YAML or via the UI:

- name: Secret Scanner
  actions:
    - scan: secret_detection
      variables:
        SECURE_ANALYZERS_PREFIX: registry.gitlab.com/security-products

Pipeline execution policies

SEPs enable the injection of a set of security-related jobs into any project pipeline. In contrast, PEPs apply entire pipeline configurations to projects, offering a lot more flexibility when it comes to customizing security constraints.

There are two methods for implementing these policies, known as "actions": inject and override. These actions function similarly to the patterns we have seen in the compliance frameworks section and provide flexible ways to enhance and enforce security standards within the development workflow.
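The following is a rough sketch of how a PEP might select one of the two actions. The field names and the strategy values inject_ci and override_project_ci reflect our understanding of the policy schema in GitLab 17.x and may differ in your version; the policy name, project path, and file name are hypothetical.

```yaml
pipeline_execution_policy:
  - name: Enforce security scans   # hypothetical policy name
    description: ''
    enabled: true
    # "inject_ci" adds the policy pipeline's jobs to the project pipeline;
    # "override_project_ci" replaces the project pipeline entirely.
    pipeline_config_strategy: inject_ci
    content:
      include:
        - project: my-group/security-policies   # hypothetical project path
          file: policy-pipeline.yml             # hypothetical file name
```

The included file is a regular pipeline definition, so the patterns from the compliance frameworks section carry over largely unchanged.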

Injecting pipelines

Injecting pipelines involves adding the jobs and other elements defined in the policy pipeline into the project pipeline. Currently, jobs should only be injected into reserved stages, namely .pipeline-policy-pre and .pipeline-policy-post to avoid unpredictable results.
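As a small sketch of this, a policy pipeline could place its jobs in the two reserved stages like this. The job names and scripts are placeholders, not part of any GitLab template.

```yaml
# Policy pipeline file referenced by a pipeline execution policy
policy-pre-check:                 # hypothetical job name
  stage: .pipeline-policy-pre     # reserved stage, runs before the project's stages
  script:
    - echo "Running enforced checks before project jobs"

policy-post-summary:              # hypothetical job name
  stage: .pipeline-policy-post    # reserved stage, runs after the project's stages
  script:
    - echo "Collecting results after project jobs"
```

Keeping policy jobs in these stages avoids any dependency on the stage names a given project happens to define.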

GitLab handles name clashes between jobs or variables in policy and project pipelines effectively by building each pipeline in isolation before combining them. This ensures that the integration process is seamless and does not disrupt existing workflows or configurations.

The above screenshot shows an example of an injected policy pipeline. Project pipeline jobs are prefixed with prj- for easier identification.

Overriding pipelines

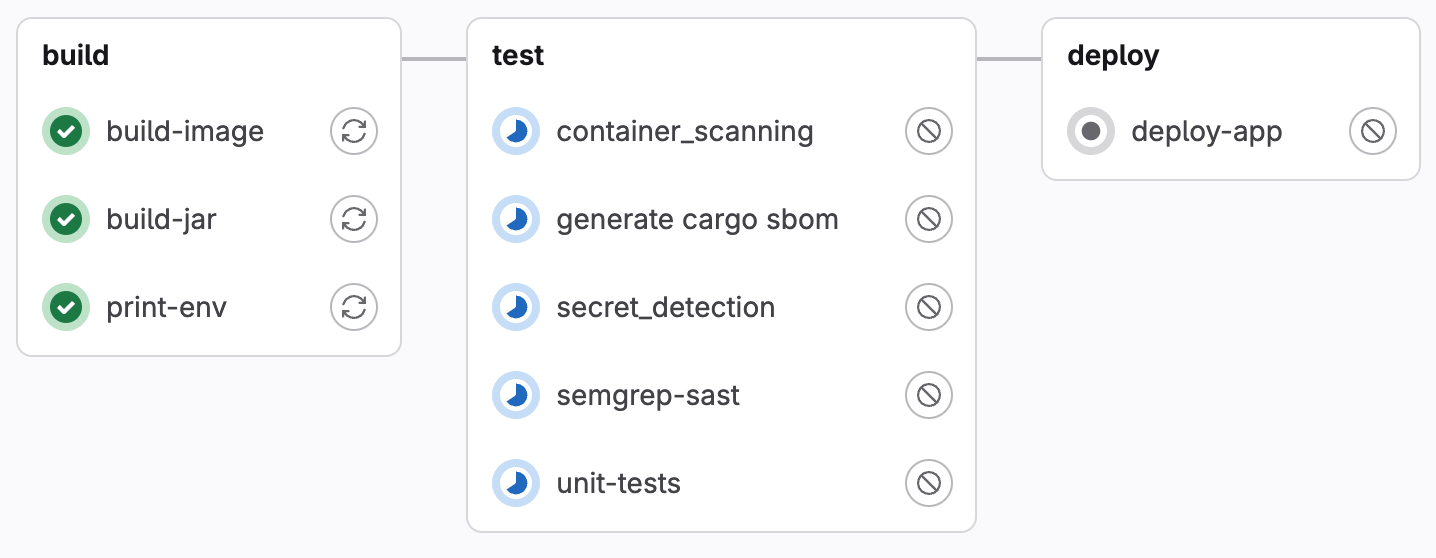

In the override approach, the project pipeline is completely replaced by the policy pipeline. This method is similar to compliance pipelines that do not include the project's .gitlab-ci.yml file. Despite the override, the pipelines run using the project's CI/CD variables, maintaining consistency with project-specific configurations. The compliance pipeline we used earlier makes a perfectly fine policy pipeline, too:

stages: ["build", "test", "deploy"]

include:
  - component: $CI_SERVER_FQDN/components/sast/sast@2.0.2
  - component: $CI_SERVER_FQDN/components/dependency-scanning/main@0.2.0
  - component: $CI_SERVER_FQDN/components/secret-detection/secret-detection@1.1.2
  - component: $CI_SERVER_FQDN/components/container-scanning/container-scanning@4.1.0

build-job:
  stage: build
  script: echo "Building the container image"

test-job:
  stage: test
  script: echo "Running unit tests"

deploy-job:
  stage: deploy
  script: echo "Deploying app"

The image below shows a slightly more complete pipeline than the mock pipeline above:

Note: This doesn't currently work with SEPs.

However, the existence of a Dockerfile may not always be a valid indicator, as developers might be building without Dockerfiles using Cloud Native Buildpacks, Heroku Buildpacks, Kaniko, or other tools. Managed pipelines do not encounter this challenge, as they are more controlled and centralized.

Projects with multiple container images

For projects that produce multiple container images, several container scanning jobs would be necessary for proper coverage. This raises similar questions as before: "How do we know there are multiple?" and "Is the source of that information trustworthy?" If we wanted to rely on the existence of Dockerfiles, a dynamic approach would be necessary that includes a container scanning job for each Dockerfile detected.
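For comparison, a static version of this idea could look like the sketch below, which reuses the template job once per image through extends. The image names frontend and backend are hypothetical, and the list is maintained by hand, so it does not answer the discovery and trust questions raised above.

```yaml
include:
  - template: Jobs/Container-Scanning.gitlab-ci.yml

# Reuse the template job for the first image.
container_scanning:
  variables:
    CS_IMAGE: "$CI_REGISTRY_IMAGE/frontend:$CI_COMMIT_SHORT_SHA"

# Second scan job that inherits everything else from the template job.
container_scanning_backend:
  extends: container_scanning
  variables:
    CS_IMAGE: "$CI_REGISTRY_IMAGE/backend:$CI_COMMIT_SHORT_SHA"
```

A dynamic approach would have to generate one such job per detected Dockerfile instead of relying on a hand-written list.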

Get started with security scanning

In this article, you've learned about a variety of approaches to adding security scanning to CI/CD pipelines with a close look at ease of use, customizability, and the ability to strictly enforce scanning. You've seen that a pipeline author who is held responsible for project compliance needs to keep a few things in mind during the process to avoid surprises down the line. We recommend building a small testing space on your GitLab instance and then running a few tests to reproduce the main points of this article. Put yourself in the shoes of a malicious Sasha (Sashas aren't generally malicious people, but it's a good exercise) and think about how you could fool that annoying Amy and her security scans.

GitLab provides strong support for all sorts of requirements, and all approaches are, at least in our eyes, easy to implement due to the platform's baked-in functionality. You should find ways to bulletproof your scan jobs and, if not, you should open a ticket with our support.

Happy pipelining!
